
Python 3.10 is out! Volunteers have been working on the new version since May 2020 to bring you a better, faster, and more secure Python. As of October 4, 2021, the first official version is available.

Each new version of Python brings a host of changes. You can read about all of them in the documentation. Here, you’ll get to learn about the coolest new features.

In this tutorial, you’ll learn about:

- Debugging with more helpful and precise error messages

- Using structural pattern matching to work with data structures

- Adding more readable and more specific type hints

- Checking the length of sequences when using zip()

- Calculating multivariable statistics

To try out the new features yourself, you need to run Python 3.10. You can get it from the Python homepage. Alternatively, you can use Docker with the latest Python image.



Better Error Messages

Python is often lauded for being a user-friendly programming language. While this is true, there are certain parts of Python that could be friendlier. Python 3.10 comes with a host of more precise and constructive error messages. In this section, you’ll see some of the newest improvements. The full list is available in the documentation.

Think back to writing your first Hello World program in Python:

# hello.py

print("Hello, World!)

Maybe you created a file, added the famous call to print(), and saved it as hello.py. You then ran the program, eager to call yourself a proper Pythonista. However, something went wrong:

$ python hello.py

File "/home/rp/hello.py", line 3

print("Hello, World!)

^

SyntaxError: EOL while scanning string literal

There was a SyntaxError in the code. EOL, what does that even mean? You went back to your code, and after a bit of staring and searching, you realized that there was a missing quotation mark at the end of your string.

One of the more impactful improvements in Python 3.10 is better and more precise error messages for many common issues. If you run your buggy Hello World in Python 3.10, you’ll get a bit more help than in earlier versions of Python:

$ python hello.py

File "/home/rp/hello.py", line 3

print("Hello, World!)

^

SyntaxError: unterminated string literal (detected at line 3)

The error message is still a bit technical, but gone is the mysterious EOL. Instead, the message tells you that you need to terminate your string! There are similar improvements to many different error messages, as you’ll see below.

A SyntaxError is raised when your code is parsed, before it even starts to execute. Syntax errors can be tricky to debug because the interpreter has often reported imprecise or even misleading error messages. The following code is missing a curly brace to terminate the dictionary:

1 # unterminated_dict.py
2
3 months = {
4     10: "October",
5     11: "November",
6     12: "December"
7
8 print(f"{months[10]} is the tenth month")

The closing curly brace that should have been on line 7 is missing. If you run this code with Python 3.9 or earlier, you'll see the following error message:

File "/home/rp/unterminated_dict.py", line 8

print(f"{months[10]} is the tenth month")

^

SyntaxError: invalid syntax

The error message highlights line 8, but there are no syntactical problems in line 8! If you’ve experienced your share of syntax errors in Python, you might already know that the trick is to look at the lines before the one Python complains about. In this case, you’re looking for the missing closing brace on line 7.

In Python 3.10, the same code shows a much more helpful and precise error message:

File "/home/rp/unterminated_dict.py", line 3

months = {

^

SyntaxError: '{' was never closed

This points you straight to the offending dictionary and allows you to fix the issue in no time.

There are a few other ways to mess up dictionary syntax. A typical one is forgetting a comma after one of the items:

1 # missing_comma.py
2
3 months = {
4     10: "October"
5     11: "November",
6     12: "December",
7 }

In this code, a comma is missing at the end of line 4. Python 3.10 gives you a clear suggestion on how to fix your code:

File "/home/real_python/missing_comma.py", line 4

10: "October"

^^^^^^^^^

SyntaxError: invalid syntax. Perhaps you forgot a comma?

You can add the missing comma and have your code back up and running in no time.

Another common mistake is using the assignment operator (=) instead of the equality comparison operator (==) when you’re comparing values. Previously, this would just cause another invalid syntax message. In the newest version of Python, you get some more advice:

>>> if month = "October":

File "<stdin>", line 1

if month = "October":

^^^^^^^^^^^^^^^^^

SyntaxError: invalid syntax. Maybe you meant '==' or ':=' instead of '='?

The parser suggests that you maybe meant to use a comparison operator or an assignment expression operator instead.

Take note of another nifty improvement in Python 3.10 error messages. The last two examples show how carets (^^^) highlight the whole offending expression. Previously, a single caret symbol (^) indicated just an approximate location.

The final error message improvement that you’ll play with for now is that attribute and name errors can now offer suggestions if you misspell an attribute or a name:

>>> import math

>>> math.py

AttributeError: module 'math' has no attribute 'py'. Did you mean: 'pi'?

>>> pint

NameError: name 'pint' is not defined. Did you mean: 'print'?

>>> release = "3.10"

>>> relaese

NameError: name 'relaese' is not defined. Did you mean: 'release'?

Note that the suggestions work for both built-in names and names that you define yourself, although they may not be available in all environments. If you like these kinds of suggestions, check out BetterErrorMessages, which offers similar suggestions in even more contexts.

The improvements you’ve seen in this section are just some of the many error messages that have gotten a face-lift. The new Python will be even more user-friendly than before, and hopefully, the new error messages will save you both time and frustration going forward.

Structural Pattern Matching

The biggest new feature in Python 3.10, probably both in terms of controversy and potential impact, is structural pattern matching. Its introduction has sometimes been referred to as switch ... case coming to Python, but you’ll see that structural pattern matching is much more powerful than that.

You’ll see three different examples that together highlight why this feature is called structural pattern matching and show you how you can use this new feature:

- Detecting and deconstructing different structures in your data

- Using different kinds of patterns

- Matching literal patterns

Structural pattern matching is a comprehensive addition to the Python language. To give you a taste of how you can take advantage of it in your own projects, the next three subsections will dive into some of the details. You’ll also see some links that can help you explore in even more depth if you want.

Deconstructing Data Structures

At its core, structural pattern matching is about defining patterns to which your data structures can be matched. In this section, you’ll study a practical example where you’ll work with data that are structured differently, even though the meaning is the same. You’ll define several patterns, and depending on which pattern matches your data, you’ll process your data appropriately.

This section will be a bit light on explanations of the possible patterns. Instead, it will try to give you an impression of the possibilities. The next section will step back and explain the patterns in more detail.

Time to match your first pattern! The following example uses a match ... case block to find the first name of a user by extracting it from a user data structure:

>>> user = {
...     "name": {"first": "Pablo", "last": "Galindo Salgado"},
...     "title": "Python 3.10 release manager",
... }
>>> match user:
...     case {"name": {"first": first_name}}:
...         pass
...
>>> first_name
'Pablo'

You can see structural pattern matching at work in the match ... case block. user is a small dictionary with user information. The case line specifies a pattern that user is matched against. In this case, you're looking for a dictionary with a "name" key whose value is another dictionary. This nested dictionary has a key called "first", and the corresponding value is bound to the variable first_name.

For a practical example, say that you’re processing user data where the underlying data model changes over time. Therefore, you need to be able to process different versions of the same data.

In the next example, you’ll use data from randomuser.me. This is a great API for generating random user data that you can use during testing and development. The API is also an example of an API that has changed over time. You can still access the old versions of the API.


You can get a random user from the API using requests as follows:

# random_user.py

import requests

def get_user(version="1.3"):
    """Get random users"""
    url = f"https://randomuser.me/api/{version}/?results=1"
    response = requests.get(url)
    if response:
        return response.json()["results"][0]

get_user() gets one random user in JSON format. Note the version parameter. The structure of the returned data has changed quite a bit between earlier versions like "1.1" and the current version "1.3", but in each case, the actual user data are contained in a list stored under the "results" key. The function returns the first, and only, user in this list. If the API responds with an error status, the response is falsy and get_user() implicitly returns None.

At the time of writing, the latest version of the API is 1.3 and the data has the following structure:

{

"gender": "female",

"name": {

"title": "Miss",

"first": "Ilona",

"last": "Jokela"

},

"location": {

"street": {

"number": 4473,

"name": "Mannerheimintie"

},

"city": "Harjavalta",

"state": "Ostrobothnia",

"country": "Finland",

"postcode": 44879,

"coordinates": {

"latitude": "-6.0321",

"longitude": "123.2213"

},

"timezone": {

"offset": "+5:30",

"description": "Bombay, Calcutta, Madras, New Delhi"

}

},

"email": "ilona.jokela@example.com",

"login": {

"uuid": "632b7617-6312-4edf-9c24-d6334a6af52d",

"username": "brownsnake482",

"password": "biatch",

"salt": "ofk518ZW",

"md5": "6d589615ca44f6e583c85d45bf431c54",

"sha1": "cd87c931d579bdff77af96c09e0eea82d1edfc19",

"sha256": "6038ede83d4ce74116faa67fb3b1b2e6f6898e5749b57b5a0312bd46a539214a"

},

"dob": {

"date": "1957-05-20T08:36:09.083Z",

"age": 64

},

"registered": {

"date": "2006-07-30T18:39:20.050Z",

"age": 15

},

"phone": "07-369-318",

"cell": "048-284-01-59",

"id": {

"name": "HETU",

"value": "NaNNA204undefined"

},

"picture": {

"large": "https://randomuser.me/api/portraits/women/28.jpg",

"medium": "https://randomuser.me/api/portraits/med/women/28.jpg",

"thumbnail": "https://randomuser.me/api/portraits/thumb/women/28.jpg"

},

"nat": "FI"

}

One of the members that changed between different versions is "dob", the date of birth. Note that in version 1.3, this is a JSON object with two members, "date" and "age".

Note: By default, randomuser.me returns a random user. You can get the exact same user as in this example by setting the seed to 310:

url = f"https://randomuser.me/api/{version}/?results=1&seed=310"

You set the seed by adding &seed=310 to the URL. The full object returned by the API also contains some metadata in a member named "info". These metadata will include the version of the data as well as the seed used to create the random user.

Compare the result above with a version 1.1 random user:

{

"gender": "female",

"name": {

"title": "miss",

"first": "ilona",

"last": "jokela"

},

"location": {

"street": "7336 myllypuronkatu",

"city": "kurikka",

"state": "central ostrobothnia",

"postcode": 53740

},

"email": "ilona.jokela@example.com",

"login": {

"username": "blackelephant837",

"password": "sand",

"salt": "yofk518Z",

"md5": "b26367ea967600d679ee3e0b9bda012f",

"sha1": "87d2910595acba5b8e8aa8b00a841bab08580e2f",

"sha256": "73bd0d205d0dc83ae184ae222ff2e9de5ea4039119a962c4f97fabd5bbfa7aca"

},

"dob": "1966-04-17 11:57:01",

"registered": "2005-08-10 10:15:01",

"phone": "04-636-931",

"cell": "048-828-40-15",

"id": {

"name": "HETU",

"value": "366-9204"

},

"picture": {

"large": "https://randomuser.me/api/portraits/women/24.jpg",

"medium": "https://randomuser.me/api/portraits/med/women/24.jpg",

"thumbnail": "https://randomuser.me/api/portraits/thumb/women/24.jpg"

},

"nat": "FI"

}

Observe that in this older format, the value of the "dob" member is a plain string.

In this example, you’ll work with the information about the date of birth (dob) for each user. The structure of these data has changed between different versions of the Random User API:

# Version 1.1

"dob": "1966-04-17 11:57:01"

# Version 1.3

"dob": {"date": "1957-05-20T08:36:09.083Z", "age": 64}

Note that in version 1.1, the date of birth is represented as a simple string, while in version 1.3, it’s a JSON object with two members: "date" and "age". Say that you want to find the age of a user. Depending on the structure of your data, you’d either need to calculate the age based on the date of birth or look up the age if it’s already available.

Note: The value of age is accurate when you download the data. If you store the data, this value will eventually become outdated. If this is a concern, you should calculate the current age based on date.

Traditionally, you would detect the structure of the data with an if test, maybe based on the type of the "dob" field. You can approach this differently in Python 3.10. Now, you can use structural pattern matching instead:

 1 # random_user.py (continued)
 2
 3 from datetime import datetime
 4
 5 def get_age(user):
 6     """Get the age of a user"""
 7     match user:
 8         case {"dob": {"age": int(age)}}:
 9             return age
10         case {"dob": dob}:
11             now = datetime.now()
12             dob_date = datetime.strptime(dob, "%Y-%m-%d %H:%M:%S")
13             return now.year - dob_date.year

The match ... case construct is new in Python 3.10 and is how you perform structural pattern matching. You start with a match statement that specifies what you want to match. In this example, that’s the user data structure.

One or several case statements follow match. Each case describes one pattern, and the indented block beneath it says what should happen if there’s a match. In this example:

- Line 8 matches a dictionary with a "dob" key whose value is another dictionary with an integer (int) item named "age". The name age captures its value.

- Line 10 matches any dictionary with a "dob" key. The name dob captures its value.

One important feature of pattern matching is that at most one pattern will be matched. Since the pattern on line 10 matches any dictionary with "dob", it’s important that the more specific pattern on line 8 comes first.

Note: The age calculation on line 13 is not very precise since it ignores months and days. You can improve on this by explicitly comparing months and days to check whether the user has already celebrated their birthday this year. However, a better solution would be to use relativedelta from the dateutil package, which lets you calculate the difference in whole years directly.

Before looking closer at the details of the patterns and how they work, try calling get_age() with different data structures to see the result:

>>> import random_user

>>> users11 = random_user.get_user(version="1.1")

>>> random_user.get_age(users11)

55

>>> users13 = random_user.get_user(version="1.3")

>>> random_user.get_age(users13)

64

Your code calculates the age for both versions of the user data, even though they represent the date of birth differently.

Look closer at those patterns. The first pattern, {"dob": {"age": int(age)}}, matches version 1.3 of the user data:

{

...

"dob": {"date": "1957-05-20T08:36:09.083Z", "age": 64},

...

}

The first pattern is a nested pattern. The outer curly braces say that a dictionary with the key "dob" is required. The corresponding value should be a dictionary. This nested dictionary must match the subpattern {"age": int(age)}. In other words, it needs to have an "age" key with an integer value. That value is bound to the name age.

The second pattern, {"dob": dob}, matches the older version 1.1 of the user data:

{

...

"dob": "1966-04-17 11:57:01",

...

}

This second pattern is a simpler pattern than the first one. Again, the curly braces indicate that it will match a dictionary. However, any dictionary with a "dob" key is matched because there are no other restrictions specified. The value of that key is bound to the name dob.

The main takeaway is that you can describe the structure of your data using mostly familiar notation. One striking change, though, is that you can use names like dob and age, which aren’t yet defined. Instead, values from your data are bound to these names when a pattern matches.

You’ve explored some of the power of structural pattern matching in this example. In the next section, you’ll dive a bit more into the details.

Using Different Kinds of Patterns

You’ve seen an example of how you can use patterns to effectively unravel complicated data structures. Now, you’ll take a step back and look at the building blocks that make up this new feature. Many things come together to make it work. In fact, there are three Python Enhancement Proposals (PEPs) that describe structural pattern matching:

- PEP 634: Specification

- PEP 635: Motivation and Rationale

- PEP 636: Tutorial

These documents give you a lot of background and detail if you’re interested in a deeper dive than what follows.

Patterns are at the center of structural pattern matching. In this section, you’ll learn about some of the different kinds of patterns that exist:

- Mapping patterns match mapping structures like dictionaries.

- Sequence patterns match sequence structures like tuples and lists.

- Capture patterns bind values to names.

- AS patterns bind the value of subpatterns to names.

- OR patterns match one of several different subpatterns.

- Wildcard patterns match anything.

- Class patterns match class structures.

- Value patterns match values stored in attributes.

- Literal patterns match literal values.

You already used several of them in the example in the previous section. In particular, you used mapping patterns to unravel data stored in dictionaries. In this section, you’ll learn more about how some of these work. All the details are available in the PEPs mentioned above.

A capture pattern is used to capture a match to a pattern and bind it to a name. Consider the following recursive function that sums a list of numbers:

1 def sum_list(numbers):
2     match numbers:
3         case []:
4             return 0
5         case [first, *rest]:
6             return first + sum_list(rest)

The first case on line 3 matches the empty list and returns 0 as its sum. The second case on line 5 uses a sequence pattern with two capture patterns to match lists with one or more elements. The first element in the list is captured and bound to the name first. The second capture pattern, *rest, uses unpacking syntax to match any number of elements. rest will bind to a list containing all elements of numbers except the first one.

sum_list() calculates the sum of a list of numbers by recursively adding the first number in the list and the sum of the rest of the numbers. You can use it as follows:

>>> sum_list([4, 5, 9, 4])

22

The sum of 4 + 5 + 9 + 4 is correctly calculated to be 22. As an exercise for yourself, you can try to trace the recursive calls to sum_list() to make sure you understand how the code sums the whole list.

Note: Capture patterns essentially assign values to variables. However, one limitation is that only undotted names are allowed. In other words, you can’t use a capture pattern to assign to a class or instance attribute directly.

sum_list() handles summing up a list of numbers. Observe what happens if you try to sum anything that isn’t a list:

>>> print(sum_list("4594"))

None

>>> print(sum_list(4594))

None

Passing a string or a number to sum_list() returns None. This occurs because none of the patterns match, and the execution continues after the match block. That happens to be the end of the function, so sum_list() implicitly returns None.

Often, though, you want to be alerted about failed matches. You can add a catchall pattern as the final case that handles this by raising an error, for example. You can use the underscore (_) as a wildcard pattern that matches anything without binding it to a name. You can add some error handling to sum_list() as follows:

def sum_list(numbers):
    match numbers:
        case []:
            return 0
        case [first, *rest]:
            return first + sum_list(rest)
        case _:
            wrong_type = numbers.__class__.__name__
            raise ValueError(f"Can only sum lists, not {wrong_type!r}")

The final case will match anything that doesn’t match the first two patterns. This will raise a descriptive error, for instance, if you try to calculate sum_list(4594). This is useful when you need to alert your users that some input was not matched as expected.

Your patterns are still not foolproof, though. Consider what happens if you try to sum a list of strings:

>>> sum_list(["45", "94"])

TypeError: can only concatenate str (not "int") to str

The base case returns 0, so the summing only works for types that you can add to numbers. Python doesn't know how to add numbers and text strings together. You can restrict your pattern to only match integers using a class pattern:

def sum_list(numbers):
    match numbers:
        case []:
            return 0
        case [int(first), *rest]:
            return first + sum_list(rest)
        case _:
            raise ValueError("Can only sum lists of numbers")

Adding int() around first makes sure that the pattern only matches if the value is an integer. This might be too restrictive, though. Your function should be able to sum both integers and floating-point numbers, so how can you allow this in your pattern?

To check whether at least one out of several subpatterns matches, you can use an OR pattern. OR patterns consist of two or more subpatterns, and the pattern matches if at least one of the subpatterns does. You can use this to match when the first element is either of type int or type float:

def sum_list(numbers):
    match numbers:
        case []:
            return 0
        case [int(first) | float(first), *rest]:
            return first + sum_list(rest)
        case _:
            raise ValueError("Can only sum lists of numbers")

You use the pipe symbol (|) to separate the subpatterns in an OR pattern. Your function now allows summing a list of floating-point numbers:

>>> sum_list([45.94, 46.17, 46.72])

138.82999999999998

There’s a lot of power and flexibility within structural pattern matching, even more than what you’ve seen so far. Some things that aren’t covered in this overview are:

- Using guards to restrict patterns

- Using AS patterns to capture the value of subpatterns

- Using class patterns to match custom enums and data classes

If you’re interested, have a look in the documentation to learn more about these features as well. In the next section, you’ll learn about literal patterns and value patterns.

Matching Literal Patterns

A literal pattern is a pattern that matches a literal object like an explicit string or number. In a sense, this is the most basic kind of pattern and allows you to emulate switch ... case statements seen in other languages. The following example matches a specific name:

def greet(name):
    match name:
        case "Guido":
            print("Hi, Guido!")
        case _:
            print("Howdy, stranger!")

The first case matches the literal string "Guido". In this case, you use _ as a wildcard to print a generic greeting whenever name is not "Guido". Such literal patterns can sometimes take the place of if ... elif ... else constructs and can play the same role that switch ... case does in some other languages.

One limitation with structural pattern matching is that you can’t directly match values stored in variables. Say that you’ve defined bdfl = "Guido". A pattern like case bdfl: will not match "Guido". Instead, this will be interpreted as a capture pattern that matches anything and binds that value to bdfl, effectively overwriting the old value.

You can, however, use a value pattern to match stored values. A value pattern looks a bit like a capture pattern but uses a previously defined dotted name that holds the value that will be matched against.

Note: A dotted name is a name with a dot (.) in it. In practice, this will reference an attribute of either a class, an instance of a class, an enumeration, or a module.

You can, for example, use an enumeration to create such dotted names:

import enum

class Pythonista(str, enum.Enum):
    BDFL = "Guido"
    FLUFL = "Barry"

def greet(name):
    match name:
        case Pythonista.BDFL:
            print("Hi, Guido!")
        case _:
            print("Howdy, stranger!")

The first case now uses a value pattern to match Pythonista.BDFL, which is "Guido". Note that you can use any dotted name in a value pattern. You could, for example, have used a regular class or a module instead of the enumeration.

To see a bigger example of how to use literal patterns, consider the game of FizzBuzz. This is a counting game where you should replace some numbers with words according to the following rules:

- You replace numbers divisible by 3 with fizz.

- You replace numbers divisible by 5 with buzz.

- You replace numbers divisible by both 3 and 5 with fizzbuzz.

FizzBuzz is sometimes used to introduce conditionals in programming education and as a screening problem in interviews. Even though a solution is quite straightforward, Joel Grus has written a full book about different ways to program the game.

A typical solution in Python will use if ... elif ... else as follows:

def fizzbuzz(number):
    mod_3 = number % 3
    mod_5 = number % 5

    if mod_3 == 0 and mod_5 == 0:
        return "fizzbuzz"
    elif mod_3 == 0:
        return "fizz"
    elif mod_5 == 0:
        return "buzz"
    else:
        return str(number)

The % operator calculates the modulus, which you can use to test divisibility: for two integers a and b, if a % b is 0, then a is divisible by b.

In fizzbuzz(), you calculate number % 3 and number % 5, which you then use to test for divisibility with 3 and 5. Note that you must do the test for divisibility with both 3 and 5 first. If not, numbers that are divisible by both 3 and 5 will be covered by either the "fizz" or the "buzz" cases instead.

You can check that your implementation gives the expected result:

>>> fizzbuzz(3)
'fizz'
>>> fizzbuzz(14)
'14'
>>> fizzbuzz(15)
'fizzbuzz'
>>> fizzbuzz(92)
'92'
>>> fizzbuzz(65)
'buzz'

You can confirm for yourself that 3 is divisible by 3, 65 is divisible by 5, and 15 is divisible by both 3 and 5, while 14 and 92 aren’t divisible by either 3 or 5.
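To see the first rounds of the game at once, you can call fizzbuzz() in a loop. The sketch repeats the if ... elif ... else version so that it runs on its own:

```python
def fizzbuzz(number):
    mod_3 = number % 3
    mod_5 = number % 5
    if mod_3 == 0 and mod_5 == 0:
        return "fizzbuzz"
    elif mod_3 == 0:
        return "fizz"
    elif mod_5 == 0:
        return "buzz"
    else:
        return str(number)

print(" ".join(fizzbuzz(n) for n in range(1, 16)))
# 1 2 fizz 4 buzz fizz 7 8 fizz buzz 11 fizz 13 14 fizzbuzz
```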

An if ... elif ... else structure where you’re comparing one or a few variables several times over is quite straightforward to rewrite using pattern matching instead. For example, you can do the following:

def fizzbuzz(number):
    mod_3 = number % 3
    mod_5 = number % 5

    match (mod_3, mod_5):
        case (0, 0):
            return "fizzbuzz"
        case (0, _):
            return "fizz"
        case (_, 0):
            return "buzz"
        case _:
            return str(number)

You match on both mod_3 and mod_5. Each case pattern then matches either the literal number 0 or the wildcard _ on the corresponding values.

Compare and contrast this version with the previous one. Note how the pattern (0, 0) corresponds to the test mod_3 == 0 and mod_5 == 0, while (0, _) corresponds to mod_3 == 0.

As you saw earlier, you can use an OR pattern to match on several different patterns. For example, since mod_3 can only take the values 0, 1, and 2, you can replace case (_, 0) with case (1, 0) | (2, 0). Remember that (0, 0) has already been covered.

Note: If you’ve been using switch ... case in other languages, you should remember that there’s no fallthrough in Python’s pattern matching. That means that at most one case will ever be executed, namely the first case that matches. This is different from languages like C and Java. You can handle most of the effects of fallthroughs with OR patterns.

For a long time, the Python core developers consciously chose not to include switch ... case statements in the language. However, some third-party packages add them, like switchlang, which adds a switch command that also works on earlier versions of Python.

Type Unions, Aliases, and Guards

Reliably, each new Python release brings some improvements to the static typing system. Python 3.10 is no exception. In fact, four different PEPs about typing accompany this new release:

- PEP 604: Allow writing union types as X | Y

- PEP 613: Explicit Type Aliases

- PEP 647: User-Defined Type Guards

- PEP 612: Parameter Specification Variables

PEP 604 will probably be the most widely used of these changes going forward, but you’ll get a brief overview of each of the features in this section.

You can use union types to declare that a variable can have one of several different types. For example, you've been able to type hint a function that calculates the mean of a list of numbers, where each number is either a float or an integer, as follows:

from typing import List, Union

def mean(numbers: List[Union[float, int]]) -> float:
    return sum(numbers) / len(numbers)

The annotation List[Union[float, int]] means that numbers should be a list where each element is either a floating-point number or an integer. This works well, but the notation is a bit verbose. Also, you need to import both List and Union from typing.

Note: The implementation of mean() looks straightforward, but there are actually several corner cases where it will fail. If you need to calculate means, you should use statistics.mean() instead.

In Python 3.10, you can replace Union[float, int] with the more succinct float | int. Combine this with the ability to use list instead of typing.List in type hints, which Python 3.9 introduced. You can then simplify your code while keeping all the type information:

def mean(numbers: list[float | int]) -> float:
    return sum(numbers) / len(numbers)

The annotation of numbers is easier to read now, and as an added bonus, you didn’t need to import anything from typing.

A special case of union types is when a variable can have either a specific type or be None. You can annotate such optional types either as Union[None, T] or, equivalently, Optional[T] for some type T. There is no new, special syntax for optional types, but you can use the new union syntax to avoid importing typing.Optional:

address: str | None

In this example, address is allowed to be either None or a string.

You can also use the new union syntax at runtime in isinstance() or issubclass() tests:

>>> isinstance("mypy", str | int)

True

>>> issubclass(str, int | float | bytes)

False

Traditionally, you’ve used tuples to test for several types at once—for example, (str, int) instead of str | int. This old syntax will still work.

Type aliases allow you to quickly define new aliases that can stand in for more complicated type declarations. For example, say that you’re representing a playing card using a tuple of suit and rank strings and a deck of cards by a list of such playing card tuples. A deck of cards is then type hinted as list[tuple[str, str]].

To simplify type annotation, you define type aliases as follows:

Card = tuple[str, str]
Deck = list[Card]

This usually works okay. However, it’s often not possible for the type checker to know whether such a statement is a type alias or just the definition of a regular global variable. To help the type checker—or really, help the type checker help you—you can now explicitly annotate type aliases:

from typing import TypeAlias

Card: TypeAlias = tuple[str, str]
Deck: TypeAlias = list[Card]

Adding the TypeAlias annotation clarifies the intention, both to a type checker and to anyone reading your code.

Type guards are used to narrow down union types. The following function takes in either a string or None but always returns a tuple of strings representing a playing card:

def get_ace(suit: str | None) -> tuple[str, str]:
    if suit is None:
        suit = "♠"
    return (suit, "A")

The if suit is None: test works as a type guard, and static type checkers are able to realize that suit is necessarily a string when it’s returned.

Currently, the type checkers can only use a few different constructs to narrow down union types in this way. With the new typing.TypeGuard, you can annotate custom functions that can be used to narrow down union types:

from typing import Any, TypeAlias, TypeGuard

Card: TypeAlias = tuple[str, str]
Deck: TypeAlias = list[Card]

def is_deck_of_cards(obj: Any) -> TypeGuard[Deck]:
    # Return True if obj is a deck of cards, otherwise False
    ...

is_deck_of_cards() should return True or False depending on whether obj represents a Deck object or not. You can then use your guard function, and the type checker will be able to narrow down the types correctly:

def get_score(card_or_deck: Card | Deck) -> int:
    if is_deck_of_cards(card_or_deck):
        # Calculate score of a deck of cards
        ...

Inside of the if block, the type checker knows that card_or_deck is, in fact, of the type Deck. See PEP 647 for more details.

The final new typing feature is Parameter Specification Variables, which are related to type variables. Consider the definition of a decorator. In general, it looks something like the following:

import functools
from typing import Any, Callable, TypeVar

R = TypeVar("R")

def decorator(func: Callable[..., R]) -> Callable[..., R]:
    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> R:
        ...
    return wrapper

The annotations mean that the function returned by the decorator is a callable with some parameters and the same return type, R, as the function passed into the decorator. The ellipsis (...) in Callable[..., R] correctly allows any number of parameters, and each of those parameters can be of any type. However, there’s no validation that the returned callable has the same parameters as the function that was passed in. In practice, this means that type checkers aren’t able to check decorated functions properly.

Unfortunately, you can’t use TypeVar for the parameters because you don’t know how many parameters the function will have. In Python 3.10, you’ll have access to ParamSpec in order to type hint these kinds of callables properly. ParamSpec works similarly to TypeVar but stands in for several parameters at once. You can rewrite your decorator as follows to take advantage of ParamSpec:

import functools
from typing import Callable, ParamSpec, TypeVar

P = ParamSpec("P")
R = TypeVar("R")

def decorator(func: Callable[P, R]) -> Callable[P, R]:
    @functools.wraps(func)
    def wrapper(*args: P.args, **kwargs: P.kwargs) -> R:
        ...
    return wrapper

Note that you also use P when you annotate wrapper(). You can also use the new typing.Concatenate to add types to ParamSpec. See the documentation and PEP 612 for details and examples.

Stricter Zipping of Sequences

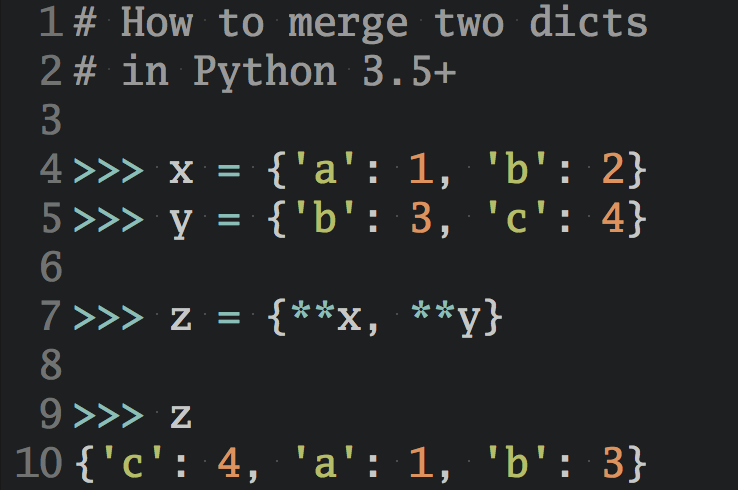

zip() is a built-in function in Python that can combine elements from several sequences. Python 3.10 introduces the new strict parameter, which adds a runtime test to check that all sequences being zipped have the same length.

As an example, consider the following table of Lego sets:

| Name | Set Number | Pieces |
|---|---|---|
| Louvre | 21024 | 695 |
| Diagon Alley | 75978 | 5544 |
| NASA Apollo Saturn V | 92176 | 1969 |
| Millennium Falcon | 75192 | 7541 |
| New York City | 21028 | 598 |

One way to represent these data in plain Python would be with each column as a list. It could look something like this:

>>> names = ["Louvre", "Diagon Alley", "Saturn V", "Millennium Falcon", "NYC"]
>>> set_numbers = ["21024", "75978", "92176", "75192", "21028"]
>>> num_pieces = [695, 5544, 1969, 7541, 598]

Note that you have three independent lists, but there’s an implicit correspondence between their elements. The first name ("Louvre"), the first set number ("21024"), and the first number of pieces (695) all describe the first Lego set.

Note: pandas is great for working with and manipulating these kinds of tabular data. However, if you’re doing smaller calculations, you may not want to introduce such a heavy dependency into your project.

zip() can be used to iterate over these three lists in parallel:

>>> for name, num, pieces in zip(names, set_numbers, num_pieces):
...     print(f"{name} ({num}): {pieces} pieces")
...
Louvre (21024): 695 pieces
Diagon Alley (75978): 5544 pieces
Saturn V (92176): 1969 pieces
Millennium Falcon (75192): 7541 pieces
NYC (21028): 598 pieces

Note how each line collects information from all three lists and shows information about one particular set. This is a very common pattern that’s used in a lot of different Python code, including in the standard library.

You can also add list() to collect the contents of all three lists in a single, nested list of tuples:

>>> list(zip(names, set_numbers, num_pieces))
[('Louvre', '21024', 695),
 ('Diagon Alley', '75978', 5544),
 ('Saturn V', '92176', 1969),
 ('Millennium Falcon', '75192', 7541),
 ('NYC', '21028', 598)]

Note how the nested list closely resembles the original table.

The dark side of using zip() is that it’s quite easy to introduce a subtle bug that can be hard to discover. Note what happens if there’s a missing item in one of your lists:

>>> set_numbers = ["21024", "75978", "75192", "21028"]  # Saturn V missing
>>> list(zip(names, set_numbers, num_pieces))
[('Louvre', '21024', 695),
 ('Diagon Alley', '75978', 5544),
 ('Saturn V', '75192', 1969),
 ('Millennium Falcon', '21028', 7541)]

All the information about the New York City set disappeared! Additionally, the set numbers for Saturn V and Millennium Falcon are wrong. If your datasets are bigger, these kinds of errors can be very hard to discover. And even when you observe that something’s wrong, it’s not always easy to diagnose and fix.

The issue is that you assumed that the three lists have the same number of elements and that the information is in the same order in each list. After set_numbers gets corrupted, this assumption is no longer true.

PEP 618 introduces a new strict keyword parameter to zip() that you can use to confirm all sequences have the same length. In your example, it would raise an error alerting you to the corrupted list:

>>> list(zip(names, set_numbers, num_pieces, strict=True))
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ValueError: zip() argument 2 is shorter than argument 1

When the iteration reaches the New York City Lego set, the second argument set_numbers is already exhausted, while there are still elements left in the first argument names. Instead of silently giving the wrong result, your code fails with an error, and you can take action to find and fix the mistake.

There are use cases when you want to combine sequences of unequal length. Here’s how zip() and itertools.zip_longest() handle these:

The following idiom divides the Lego sets into pairs:

>>> num_per_group = 2
>>> list(zip(*[iter(names)] * num_per_group))
[('Louvre', 'Diagon Alley'), ('Saturn V', 'Millennium Falcon')]

There are five sets, a number that doesn’t divide evenly into pairs. In this case, the default behavior of zip(), where the last element is dropped, might make sense. You could use strict=True here as well, but that would raise an error when your list can’t be split into pairs. A third option, which could be the best in this case, is to use zip_longest() from the itertools module in the standard library.

As the name suggests, zip_longest() combines sequences until the longest sequence is exhausted. If you use zip_longest() to divide the Lego sets, it becomes more explicit that New York City doesn’t have any pairing:

>>> from itertools import zip_longest
>>> list(zip_longest(*[iter(names)] * num_per_group, fillvalue=""))
[('Louvre', 'Diagon Alley'),
 ('Saturn V', 'Millennium Falcon'),
 ('NYC', '')]

Note that 'NYC' shows up in the last tuple together with an empty string. You can control what’s filled in for missing values with the fillvalue parameter.
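If you use this idiom often, you can wrap it in a small function. The following sketch is modeled on the grouper() recipe in the itertools documentation. The exact name and signature here are this example’s own choice:

```python
from itertools import zip_longest


def grouper(iterable, n, *, fillvalue=None):
    """Collect items into fixed-length tuples, padding the last one."""
    # The same iterator repeated n times makes zip_longest() pull
    # n consecutive items for every tuple that it builds
    iterators = [iter(iterable)] * n
    return zip_longest(*iterators, fillvalue=fillvalue)


names = ["Louvre", "Diagon Alley", "Saturn V", "Millennium Falcon", "NYC"]
print(list(grouper(names, 2, fillvalue="")))
# [('Louvre', 'Diagon Alley'), ('Saturn V', 'Millennium Falcon'), ('NYC', '')]
print(list(grouper(names, 3)))
# [('Louvre', 'Diagon Alley', 'Saturn V'), ('Millennium Falcon', 'NYC', None)]
```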

While strict is not really adding any new functionality to zip(), it can help you avoid those hard-to-find bugs.

New Functions in the statistics Module

The statistics module was added to the standard library all the way back in 2014 with the release of Python 3.4. The intent of statistics is to make statistical calculations at the level of graphing calculators available in Python.

Note: statistics isn’t designed to offer dedicated numerical data types or full-featured statistical modeling. If the standard library doesn’t cover your needs, have a look at third-party packages like NumPy, SciPy, pandas, statsmodels, PyMC3, scikit-learn, or seaborn.

Python 3.10 adds a few multivariable functions to statistics:

- correlation() to calculate Pearson’s correlation coefficient for two variables

- covariance() to calculate sample covariance for two variables

- linear_regression() to calculate the slope and intercept in a linear regression

You can use each function to describe a certain aspect of the relationship between two variables. As an example, say that you have data from a set of blog posts—the number of words in each blog post and the number of views each post has had over some time period:

>>> words = [7742, 11539, 16898, 13447, 4608, 6628, 2683, 6156, 2623, 6948]
>>> views = [8368, 5901, 3978, 3329, 2611, 2096, 1515, 1177, 814, 467]

You now want to investigate whether there’s any (linear) relationship between the number of words and number of views. In Python 3.10, you can calculate the correlation between words and views with the new correlation() function:

>>> import statistics
>>> statistics.correlation(words, views)
0.454180067865917

The correlation between two variables is always a number between -1 and 1. If it’s close to 0, then there’s little correspondence between them, while a correlation close to -1 or 1 indicates that the behaviors of the two variables tend to follow each other. In this example, a correlation of 0.45 indicates that there’s a tendency for posts with more words to have more views, although it’s not a strong connection.

Note: The common adage correlation does not imply causation is important to keep in mind. Even if you find that two variables are strongly correlated, you can’t conclude that one is the cause of the other.

You can also calculate the covariance between words and views. The covariance is another measure of the joint variability between two variables. You can calculate it with covariance():

>>> import statistics
>>> statistics.covariance(words, views)
5292289.977777777

In contrast to correlation, covariance is an absolute measure. It should be interpreted in the context of the variability within the variables themselves. In fact, you can normalize the covariance by the standard deviation of each variable to recover Pearson’s correlation coefficient:

>>> import statistics
>>> cov = statistics.covariance(words, views)
>>> σ_words, σ_views = statistics.stdev(words), statistics.stdev(views)
>>> cov / (σ_words * σ_views)
0.454180067865917

Note that this matches your earlier correlation coefficient exactly.

A third way of looking at the linear correspondence between the two variables is through simple linear regression. You do the linear regression by calculating two numbers, slope and intercept, so that the (squared) error is minimized in the approximation number of views = slope × number of words + intercept.

In Python 3.10, you can use linear_regression():

>>> import statistics
>>> statistics.linear_regression(words, views)
LinearRegression(slope=0.2424443064354672, intercept=1103.6954940247645)

Based on this regression, a post with 10,074 words could expect about 0.2424 × 10074 + 1104 = 3546 views. However, as you saw earlier, the correlation between the number of words and the number of views is quite weak. Therefore, you shouldn’t expect this prediction to be very accurate.

The LinearRegression object is a named tuple. This means that you can unpack the slope and intercept directly:

>>> import statistics
>>> slope, intercept = statistics.linear_regression(words, views)
>>> slope * 10074 + intercept
3546.0794370556605

Here, you use slope and intercept to predict the number of views on a blog post with 10,074 words.

You’ll still want to use some of the more advanced packages like pandas and statsmodels if you do a lot of statistical analysis. With the new additions to statistics in Python 3.10, however, you have the chance to do basic analysis more easily without bringing in third-party dependencies.

Other Pretty Cool Features

So far, you’ve seen the biggest and most impactful new features in Python 3.10. In this section, you’ll get a glimpse of a few of the other changes that the new version brings along. If you’re curious about all the changes made for this new version, check out the documentation.

Default Text Encodings

When you open a text file, the default encoding used to interpret the characters is system dependent. In particular, locale.getpreferredencoding() is used. On Mac and Linux, this usually returns "UTF-8", while the result on Windows is more varied.

You should therefore always specify an encoding when you attempt to open a text file:

with open("some_file.txt", mode="r", encoding="utf-8") as file:
    ...  # Do something with file

If you don’t explicitly specify an encoding, the preferred locale encoding is used, and you could experience that a file that can be read on one computer fails to open on another.

Python 3.7 introduced UTF-8 mode, which allows you to force your programs to use UTF-8 encoding independent of the locale encoding. You can enable UTF-8 mode by giving the -X utf8 command-line option to the python executable or by setting the PYTHONUTF8 environment variable to 1.
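You can check from inside a program whether UTF-8 mode is active, but the most robust habit is still to pass the encoding explicitly. This sketch writes and reads back a temporary file with a non-ASCII character. The file name greeting.txt is arbitrary:

```python
import pathlib
import sys
import tempfile

# sys.flags.utf8_mode is 1 when UTF-8 mode is on (-X utf8 or PYTHONUTF8=1)
print(f"UTF-8 mode: {bool(sys.flags.utf8_mode)}")

with tempfile.TemporaryDirectory() as folder:
    path = pathlib.Path(folder) / "greeting.txt"
    # An explicit encoding makes the round trip independent of the locale
    path.write_text("Hej, verden! ♠", encoding="utf-8")
    text = path.read_text(encoding="utf-8")

print(text)  # Hej, verden! ♠
```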

In Python 3.10, you can activate a warning that will tell you when a text file is opened without a specified encoding. Consider the following script, which doesn’t specify an encoding:

# mirror.py

import pathlib
import sys

def mirror_file(filename):
    for line in pathlib.Path(filename).open(mode="r"):
        print(f"{line.rstrip()[::-1]:>72}")

if __name__ == "__main__":
    for filename in sys.argv[1:]:
        mirror_file(filename)

The program will echo one or more text files back to the console, but with each line reversed. Run the program on itself with the encoding warning enabled:

$ python -X warn_default_encoding mirror.py mirror.py
/home/rp/mirror.py:7: EncodingWarning: 'encoding' argument not specified
  for line in pathlib.Path(filename).open(mode="r"):
yp.rorrim #
bilhtap tropmi
sys tropmi
:)emanelif(elif_rorrim fed
:)"r"=edom(nepo.)emanelif(htaP.bilhtap ni enil rof
)"}27>:]1-::[)(pirtsr.enil{"f(tnirp
:"__niam__" == __eman__ fi
:]:1[vgra.sys ni emanelif rof
)emanelif(elif_rorrim

Note the EncodingWarning printed to the console. The command-line option -X warn_default_encoding activates it. The warning will disappear if you specify an encoding—for example, encoding="utf-8"—when you open the file.

There are times when you want to use the user’s locale encoding. You can still do so by explicitly passing encoding="locale", which is new in Python 3.10. However, it’s recommended to use UTF-8 whenever possible. You can check out PEP 597 for more information.

Asynchronous Iteration

Asynchronous programming is a powerful programming paradigm that’s been available in Python since version 3.5. You can recognize an asynchronous program by its use of the async keyword or special methods that start with .__a like .__aiter__() or .__aenter__().

In Python 3.10, two new built-in functions for asynchronous iteration are added: aiter() and anext(). In practice, these functions call the .__aiter__() and .__anext__() special methods, analogous to how the regular iter() and next() call .__iter__() and .__next__(), so no new functionality is added. These are convenience functions that make your code more readable.

In other words, in the newest version of Python, the following statements—where things is an asynchronous iterable—are equivalent:

>>> it = things.__aiter__()
>>> it = aiter(things)

In either case, it ends up as an asynchronous iterator. Here’s a complete example using aiter() and anext():

The following program counts the number of lines in several files. In practice, you use Python’s ability to iterate over files to count the number of lines. The script uses asynchronous iteration in order to handle several files concurrently.

Note that you need to install the third-party aiofiles package with pip before running this code:

# line_count.py

import asyncio
import sys

import aiofiles

async def count_lines(filename):
    """Count the number of lines in the given file"""
    num_lines = 0

    async with aiofiles.open(filename, mode="r") as file:
        lines = aiter(file)
        while True:
            try:
                await anext(lines)
                num_lines += 1
            except StopAsyncIteration:
                break

    print(f"{filename}: {num_lines}")

async def count_all_files(filenames):
    """Asynchronously count lines in all files"""
    tasks = [asyncio.create_task(count_lines(f)) for f in filenames]
    await asyncio.gather(*tasks)

if __name__ == "__main__":
    asyncio.run(count_all_files(filenames=sys.argv[1:]))

asyncio is used to create and run one asynchronous task per filename. count_lines() opens one file asynchronously and iterates through it using aiter() and anext() in order to count the number of lines.

See PEP 525 to learn more about asynchronous iteration.

Context Manager Syntax

Context managers are great for managing resources in your programs. Until recently, though, their syntax has included an uncommon wart. You haven’t been allowed to use parentheses to break long with statements like this:

with (
    read_path.open(mode="r", encoding="utf-8") as read_file,
    write_path.open(mode="w", encoding="utf-8") as write_file,
):
    ...

In earlier versions of Python, this raises a SyntaxError. Instead, you need to use a backslash (\) if you want to control where you break your lines:

with read_path.open(mode="r", encoding="utf-8") as read_file, \
     write_path.open(mode="w", encoding="utf-8") as write_file:
    ...

While explicit line continuation with backslashes is possible in Python, PEP 8 discourages it. The Black formatting tool avoids backslashes completely.

In Python 3.10, you’re now allowed to add parentheses around with statements to your heart’s content. Especially if you’re employing several context managers at once, like in the example above, this can help improve the readability of your code. Python’s documentation shows a few other possibilities with this new syntax.
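Here’s a self-contained version of the earlier example that you can run. It copies a small temporary file while converting it to uppercase. The file names input.txt and output.txt are arbitrary:

```python
import pathlib
import tempfile

with tempfile.TemporaryDirectory() as folder:
    read_path = pathlib.Path(folder) / "input.txt"
    write_path = pathlib.Path(folder) / "output.txt"
    read_path.write_text("one\ntwo\nthree\n", encoding="utf-8")

    # Python 3.10 accepts several context managers inside parentheses,
    # one per line and with a trailing comma, so no backslashes are needed
    with (
        read_path.open(mode="r", encoding="utf-8") as read_file,
        write_path.open(mode="w", encoding="utf-8") as write_file,
    ):
        for line in read_file:
            write_file.write(line.upper())

    result = write_path.read_text(encoding="utf-8")

print(result)
```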

One small fun fact: parenthesized with statements actually work in version 3.9 of CPython. Their implementation came almost for free with the introduction of the PEG parser in Python 3.9. The reason that this is called a Python 3.10 feature is that Python 3.9 still lets you switch back to the old LL(1) parser with the -X oldparser option, and that parser doesn’t support parenthesized with statements. Python 3.10 removes the old parser entirely.

Modern and Secure SSL

Security can be challenging! A good rule of thumb is to avoid rolling your own security algorithms and instead rely on established packages.

Python uses OpenSSL for different cryptographic features that are exposed in the hashlib, hmac, and ssl standard library modules. Your system can manage OpenSSL, or a Python installer can include OpenSSL.

Python 3.9 supports using any of the OpenSSL versions 1.0.2 LTS, 1.1.0, and 1.1.1 LTS. Both OpenSSL 1.0.2 LTS and OpenSSL 1.1.0 have reached their end of life, so Python 3.10 will only support OpenSSL 1.1.1 LTS, as described in the following table:

| OpenSSL version | Python 3.9 | Python 3.10 | End of life |
|---|---|---|---|
| 1.0.2 LTS | ✔ | ✖ | December 20, 2019 |
| 1.1.0 | ✔ | ✖ | September 10, 2019 |
| 1.1.1 LTS | ✔ | ✔ | September 11, 2023 |

This end of support for older versions will only affect you if you need to upgrade the system Python on an older operating system. If you use macOS or Windows, or if you install Python from python.org or use (Ana)Conda, you’ll see no change.

However, Ubuntu 18.04 LTS uses OpenSSL 1.1.0, while Red Hat Enterprise Linux (RHEL) 7 and CentOS 7 both use OpenSSL 1.0.2 LTS. If you need to run Python 3.10 on these systems, you should look at installing it yourself using either the python.org or Conda installer.

Dropping support for older versions of OpenSSL will make Python more secure. It’ll also help the Python developers, because the code will be easier to maintain. Ultimately, this helps you because your Python experience will be more robust. See PEP 644 for more details.

More Information About Your Python Interpreter

The sys module contains a lot of information about your system, the current Python runtime, and the script currently being executed. You can, for example, inquire about the paths where Python looks for modules with sys.path and see all modules that have been imported in the current session with sys.modules.

In Python 3.10, sys has two new attributes. First, you can now get a frozenset with the names of all modules in the standard library:

>>> import sys
>>> len(sys.stdlib_module_names)
302
>>> sorted(sys.stdlib_module_names)[-5:]
['zipapp', 'zipfile', 'zipimport', 'zlib', 'zoneinfo']

Here, you can see that there are around 300 modules in the standard library, several of which start with the letter z. Note that only top-level modules and packages are listed. Subpackages like importlib.metadata don’t get a separate entry.

You will probably not be using sys.stdlib_module_names all that often. Still, it ties in nicely with similar introspection features like keyword.kwlist and sys.builtin_module_names.

One possible use case for the new attribute is to identify which of the currently imported modules are third-party dependencies:

>>> import pandas as pd
>>> import sys
>>> {m for m in sys.modules if "." not in m} - sys.stdlib_module_names
{'__main__', 'numpy', '_cython_0_29_24', 'dateutil', 'pytz',
 'six', 'pandas', 'cython_runtime'}

You find the imported top-level modules by looking at names in sys.modules that don’t have a dot in their name. By comparing them to the standard library module names, you find that numpy, dateutil, and pandas are some of the imported third-party modules in this example.

The other new attribute is sys.orig_argv. This is related to sys.argv, which holds the command-line arguments given to your program when it was started. In contrast, sys.orig_argv lists the command-line arguments passed to the python executable itself. Consider the following example:

# argvs.py

import sys

print(f"argv: {sys.argv}")
print(f"orig_argv: {sys.orig_argv}")

This script echoes back the orig_argv and argv lists. Run it to see how the information is captured:

$ python -X utf8 -O argvs.py 3.10 --upgrade
argv: ['argvs.py', '3.10', '--upgrade']
orig_argv: ['python', '-X', 'utf8', '-O', 'argvs.py', '3.10', '--upgrade']

Essentially, all arguments—including the name of the Python executable—end up in orig_argv. This is in contrast to argv, which only contains the arguments that aren’t handled by python itself.

Again, this is not a feature that you’ll use a lot. If your program needs to concern itself with how it’s being run, you’re usually better off relying on information that’s already exposed instead of trying to parse this list. For example, you can choose to use the strict zip() mode only when your script is not running with the optimized flag, -O, like this:

list(zip(names, set_numbers, num_pieces, strict=__debug__))

The __debug__ flag is set when the interpreter starts. It’ll be False if you’re running python with -O or -OO specified, and True otherwise. Using __debug__ is usually preferable to "-O" not in sys.orig_argv or some similar construct.

One of the motivating use cases for sys.orig_argv is that you can use it to spawn a new Python process with the same or modified command-line arguments as your current process.

Future Annotations

Annotations were introduced in Python 3 to give you a way to attach metadata to variables, function parameters, and return values. They are most commonly used to add type hints to your code.

One challenge with annotations is that they’re evaluated as regular Python expressions when they’re defined, so every name they refer to must already exist. For one thing, this makes it hard to type hint recursive classes. PEP 563 introduced postponed evaluation of annotations, making it possible to annotate with names that haven’t yet been defined. Since Python 3.7, you can activate postponed evaluation of annotations with a __future__ import:

from __future__ import annotations

The intention was that postponed evaluation would become the default at some point in the future. After the 2020 Python Language Summit, it was decided to make this happen in Python 3.10.

However, after more testing, it became clear that postponed evaluation didn’t work well for projects that use annotations at runtime. Key people in the FastAPI and the Pydantic projects voiced their concerns. At the last minute, it was decided to reschedule these changes for Python 3.11.

To ease the transition into future behavior, a few changes have been made in Python 3.10 as well. Most importantly, a new inspect.get_annotations() function has been added. You should call this to access annotations at runtime:

>>> import inspect
>>> def mean(numbers: list[int | float]) -> float:
...     return sum(numbers) / len(numbers)
...
>>> inspect.get_annotations(mean)
{'numbers': list[int | float], 'return': <class 'float'>}

Check out Annotations Best Practices for details.

How to Detect Python 3.10 at Runtime

Python 3.10 is the first version of Python with a two-digit minor version number. While this is mostly an interesting fun fact and an indication that Python 3 has been around for quite some time, it does also have some practical consequences.

When your code needs to do something specific based on the version of Python at runtime, you’ve gotten away with doing a lexicographical comparison of version strings until now. While it’s never been good practice, it’s been possible to do the following:

# bad_version_check.py

import sys

# Don't do the following
if sys.version < "3.6":
    raise SystemExit("Only Python 3.6 and above is supported")

In Python 3.10, this code will raise SystemExit and stop your program. This happens because, as strings, "3.10" is less than "3.6".

The correct way to compare version numbers is to use tuples of numbers:

# good_version_check.py

import sys

if sys.version_info < (3, 6):
    raise SystemExit("Only Python 3.6 and above is supported")

sys.version_info is a tuple object you can use for comparisons.
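The difference comes down to how Python compares strings and tuples. The following sketch shows both comparisons and wraps the correct check in a hypothetical require_python() helper:

```python
import sys

# Strings compare character by character, so "1" < "6" decides the result
print("3.10" < "3.6")    # True, which is wrong for versions
# Tuples compare element by element, so 10 < 6 is checked as numbers
print((3, 10) < (3, 6))  # False, as intended


def require_python(minimum: tuple[int, int]) -> None:
    """Hypothetical guard that exits if the running Python is too old."""
    if sys.version_info < minimum:
        version = ".".join(str(part) for part in minimum)
        raise SystemExit(f"Only Python {version} and above is supported")


require_python((3, 6))  # Returns silently on Python 3.10
```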

If you’re doing these kinds of comparisons in your code, you should check your code with flake8-2020 to make sure you’re handling versions correctly:

$ python -m pip install flake8-2020
$ flake8 bad_version_check.py good_version_check.py
bad_version_check.py:3:4: YTT103 `sys.version` compared to string (python3.10), use `sys.version_info`

With the flake8-2020 extension activated, you’ll get a recommendation about replacing sys.version with sys.version_info.

So, Should You Upgrade to Python 3.10?

You’ve now seen the coolest features of the latest version of Python. The question now is whether you should upgrade to Python 3.10, and if yes, when you should do so. There are two different aspects to consider when thinking about upgrading to Python 3.10:

- Should you upgrade your environment so that you run your code with the Python 3.10 interpreter?

- Should you write your code using the new Python 3.10 features?

Clearly, if you want to test out structural pattern matching or any of the other cool new features you’ve read about here, you need Python 3.10. It’s possible to install the latest version side by side with your current Python version. A straightforward way to do this is to use an environment manager like pyenv or Conda. You can also use Docker to run Python 3.10 without installing it locally.

Python 3.10 has been through about five months of beta testing, so there shouldn’t be any big issues with starting to use it for your own development. You may find that some of your dependencies don’t immediately have wheels for Python 3.10 available, which makes them more cumbersome to install. But in general, using the newest Python for local development is fairly safe.

As always, you should be careful before upgrading your production environment. Be vigilant about testing that your code runs well on the new version. In particular, you want to be on the lookout for features that are deprecated or removed.

Whether you can start using the new features in your code or not depends on your user base and the environment where your code is running. If you can guarantee that Python 3.10 is available, then there’s no danger in using the new union type syntax or any other new feature.

If you’re distributing an app or a library that’s used by others instead, you may want to be a bit more conservative. Currently, Python 3.6 is the oldest officially supported Python version. It reaches end-of-life in December 2021, after which Python 3.7 will be the minimum supported version.

The documentation includes a useful guide about porting your code to Python 3.10. Check it out for more details!

Conclusion

The release of a new Python version is always worth celebrating. Even if you can’t start using the new features right away, they’ll become broadly available and part of your daily life within a few years.

In this tutorial, you’ve seen new features like:

- Friendlier error messages

- Powerful structural pattern matching

- Type hint improvements

- Safer combination of sequences

- New statistics functions

For more Python 3.10 tips and a discussion with members of the Real Python team, check out Real Python Podcast Episode #81.

Have fun trying out the new features! Share your experiences in the comments below.
