Watch Now This tutorial has a related video course created by the Real Python team. Watch it together with the written tutorial to deepen your understanding: Binary, Bytes, and Bitwise Operators in Python

Computers store all kinds of information as a stream of binary digits called bits. Whether you’re working with text, images, or videos, they all boil down to ones and zeros. Python’s bitwise operators let you manipulate those individual bits of data at the most granular level.

You can use bitwise operators to implement algorithms such as compression, encryption, and error detection as well as to control physical devices in your Raspberry Pi project or elsewhere. Often, Python isolates you from the underlying bits with high-level abstractions. You’re more likely to find the overloaded flavors of bitwise operators in practice. But when you work with them in their original form, you’ll be surprised by their quirks!

In this tutorial, you’ll learn how to:

- Use Python bitwise operators to manipulate individual bits

- Read and write binary data in a platform-agnostic way

- Use bitmasks to pack information on a single byte

- Overload Python bitwise operators in custom data types

- Hide secret messages in digital images

To get the complete source code of the digital watermarking example, and to extract a secret treat hidden in an image, click the link below:

Get the Source Code: Click here to get the source code you’ll use to learn about Python’s bitwise operators in this tutorial.

Overview of Python’s Bitwise Operators

Python comes with a few different kinds of operators, such as the arithmetic, logical, and comparison operators. You can think of them as functions that take advantage of a more compact prefix and infix syntax.

Note: Python does not include postfix operators like the increment (i++) or decrement (i--) operators available in C.

Bitwise operators look virtually the same across different programming languages:

| Operator | Example | Meaning |

|---|---|---|

& |

a & b |

Bitwise AND |

| |

a | b |

Bitwise OR |

^ |

a ^ b |

Bitwise XOR (exclusive OR) |

~ |

~a |

Bitwise NOT |

<< |

a << n |

Bitwise left shift |

>> |

a >> n |

Bitwise right shift |

As you can see, they’re denoted with strange-looking symbols instead of words. This makes them stand out in Python as slightly less verbose than you might be used to seeing. You probably wouldn’t be able to figure out their meaning just by looking at them.

Note: If you’re coming from another programming language such as Java, then you’ll immediately notice that Python is missing the unsigned right shift operator denoted by three greater-than signs (>>>).

This has to do with how Python represents integers internally. Since integers in Python can have an infinite number of bits, the sign bit doesn’t have a fixed position. In fact, there’s no sign bit at all in Python!

Most of the bitwise operators are binary, which means that they expect two operands to work with, typically referred to as the left operand and the right operand. Bitwise NOT (~) is the only unary bitwise operator since it expects just one operand.

All binary bitwise operators have a corresponding compound operator that performs an augmented assignment:

| Operator | Example | Equivalent to |

|---|---|---|

&= |

a &= b |

a = a & b |

|= |

a |= b |

a = a | b |

^= |

a ^= b |

a = a ^ b |

<<= |

a <<= n |

a = a << n |

>>= |

a >>= n |

a = a >> n |

These are shorthand notations for updating the left operand in place.

That’s all there is to Python’s bitwise operator syntax! Now you’re ready to take a closer look at each of the operators to understand where they’re most useful and how you can use them. First, you’ll get a quick refresher on the binary system before looking at two categories of bitwise operators: the bitwise logical operators and the bitwise shift operators.

Binary System in Five Minutes

Before moving on, take a moment to brush up your knowledge of the binary system, which is essential to understanding bitwise operators. If you’re already comfortable with it, then go ahead and jump to the Bitwise Logical Operators section below.

Why Use Binary?

There are an infinite number of ways to represent numbers. Since ancient times, people have developed different notations, such as Roman numerals and Egyptian hieroglyphs. Most modern civilizations use positional notation, which is efficient, flexible, and well suited for doing arithmetic.

A notable feature of any positional system is its base, which represents the number of digits available. People naturally favor the base-ten numeral system, also known as the decimal system, because it plays nicely with counting on fingers.

Computers, on the other hand, treat data as a bunch of numbers expressed in the base-two numeral system, more commonly known as the binary system. Such numbers are composed of only two digits, zero and one.

Note: In math books, the base of a numeric literal is commonly denoted with a subscript that appears slightly below the baseline, such as 4210.

For example, the binary number 100111002 is equivalent to 15610 in the base-ten system. Because there are ten numerals in the decimal system—zero through nine—it usually takes fewer digits to write the same number in base ten than in base two.

Note: You can’t tell a numeral system just by looking at a given number’s digits.

For example, the decimal number 10110 happens to use only binary digits. But it represents a completely different value than its binary counterpart, 1012, which is equivalent to 510.

The binary system requires more storage space than the decimal system but is much less complicated to implement in hardware. While you need more building blocks, they’re easier to make, and there are fewer types of them. That’s like breaking down your code into more modular and reusable pieces.

More importantly, however, the binary system is perfect for electronic devices, which translate digits into different voltage levels. Because voltage likes to drift up and down due to various kinds of noise, you want to keep sufficient distance between consecutive voltages. Otherwise, the signal might end up distorted.

By employing only two states, you make the system more reliable and resistant to noise. Alternatively, you could jack up the voltage, but that would also increase the power consumption, which you definitely want to avoid.

How Does Binary Work?

Imagine for a moment that you had only two fingers to count on. You could count a zero, a one, and a two. But when you ran out of fingers, you’d need to note how many times you had already counted to two and then start over until you reached two again:

| Decimal | Fingers | Eights | Fours | Twos | Ones | Binary |

|---|---|---|---|---|---|---|

| 010 | ✊ | 0 | 0 | 0 | 0 | 02 |

| 110 | ☝️ | 0 | 0 | 0 | 1 | 12 |

| 210 | ✌️ | 0 | 0 | 1 | 0 | 102 |

| 310 | ✌️+☝️ | 0 | 0 | 1 | 1 | 112 |

| 410 | ✌️✌️ | 0 | 1 | 0 | 0 | 1002 |

| 510 | ✌️✌️+☝️ | 0 | 1 | 0 | 1 | 1012 |

| 610 | ✌️✌️+✌️ | 0 | 1 | 1 | 0 | 1102 |

| 710 | ✌️✌️+✌️+☝️ | 0 | 1 | 1 | 1 | 1112 |

| 810 | ✌️✌️✌️✌️ | 1 | 0 | 0 | 0 | 10002 |

| 910 | ✌️✌️✌️✌️+☝️ | 1 | 0 | 0 | 1 | 10012 |

| 1010 | ✌️✌️✌️✌️+✌️ | 1 | 0 | 1 | 0 | 10102 |

| 1110 | ✌️✌️✌️✌️+✌️+☝️ | 1 | 0 | 1 | 1 | 10112 |

| 1210 | ✌️✌️✌️✌️+✌️✌️ | 1 | 1 | 0 | 0 | 11002 |

| 1310 | ✌️✌️✌️✌️+✌️✌️+☝️ | 1 | 1 | 0 | 1 | 11012 |

Every time you wrote down another pair of fingers, you’d also need to group them by powers of two, which is the base of the system. For example, to count up to thirteen, you would have to use both of your fingers six times and then use one more finger. Your fingers could be arranged as one eight, one four, and one one.

These powers of two correspond to digit positions in a binary number and tell you exactly which bits to switch on. They grow right to left, starting at the least-significant bit, which determines if the number is even or odd.

Positional notation is like the odometer in your car: Once a digit in a particular position reaches its maximum value, which is one in the binary system, it rolls over to zero and the one carries over to the left. This can have a cascading effect if there are already some ones to the left of the digit.

How Computers Use Binary

Now that you know the basic principles of the binary system and why computers use it, you’re ready to learn how they represent data with it.

Before any piece of information can be reproduced in digital form, you have to break it down into numbers and then convert them to the binary system. For example, plain text can be thought of as a string of characters. You could assign an arbitrary number to each character or pick an existing character encoding such as ASCII, ISO-8859-1, or UTF-8.

In Python, strings are represented as arrays of Unicode code points. To reveal their ordinal values, call ord() on each of the characters:

>>> [ord(character) for character in "€uro"]

[8364, 117, 114, 111]

The resulting numbers uniquely identify the text characters within the Unicode space, but they’re shown in decimal form. You want to rewrite them using binary digits:

| Character | Decimal Code Point | Binary Code Point |

|---|---|---|

| € | 836410 | 100000101011002 |

| u | 11710 | 11101012 |

| r | 11410 | 11100102 |

| o | 11110 | 11011112 |

Notice that bit-length, which is the number of binary digits, varies greatly across the characters. The euro sign (€) requires fourteen bits, while the rest of the characters can comfortably fit on seven bits.

Note: Here’s how you can check the bit-length of any integer number in Python:

>>> (42).bit_length()

6

Without a pair of parentheses around the number, it would be treated as a floating-point literal with a decimal point.

Variable bit-lengths are problematic. If you were to put those binary numbers next to one another on an optical disc, for example, then you’d end up with a long stream of bits without clear boundaries between the characters:

100000101011001110101111001011011112

One way of knowing how to interpret this information is to designate fixed-length bit patterns for all characters. In modern computing, the smallest unit of information, called an octet or a byte, comprises eight bits that can store 256 distinct values.

You can pad your binary code points with leading zeros to express them in terms of bytes:

| Character | Decimal Code Point | Binary Code Point |

|---|---|---|

| € | 836410 | 00100000 101011002 |

| u | 11710 | 00000000 011101012 |

| r | 11410 | 00000000 011100102 |

| o | 11110 | 00000000 011011112 |

Now each character takes two bytes, or 16 bits. In total, your original text almost doubled in size, but at least it’s encoded reliably.

You can use Huffman coding to find unambiguous bit patterns for every character in a particular text or use a more suitable character encoding. For example, to save space, UTF-8 intentionally favors Latin letters over symbols that you’re less likely to find in an English text:

>>> len("€uro".encode("utf-8"))

6

Encoded according to the UTF-8 standard, the entire text takes six bytes. Since UTF-8 is a superset of ASCII, the letters u, r, and o occupy one byte each, whereas the euro symbol takes three bytes in this encoding:

>>> for char in "€uro":

... print(char, len(char.encode("utf-8")))

...

€ 3

u 1

r 1

o 1

Other types of information can be digitized similarly to text. Raster images are made of pixels, with every pixel having channels that represent color intensities as numbers. Sound waveforms contain numbers corresponding to air pressure at a given sampling interval. Three-dimensional models are built from geometric shapes defined by their vertices, and so forth.

At the end of the day, everything is a number.

Bitwise Logical Operators

You can use bitwise operators to perform Boolean logic on individual bits. That’s analogous to using logical operators such as and, or, and not, but on a bit level. The similarities between bitwise and logical operators go beyond that.

It’s possible to evaluate Boolean expressions with bitwise operators instead of logical operators, but such overuse is generally discouraged. If you’re interested in the details, then you can expand the box below to find out more.

The ordinary way of specifying compound Boolean expressions in Python is to use the logical operators that connect adjacent predicates, like this:

if age >= 18 and not is_self_excluded:

print("You can gamble")

Here, you check if the user is at least eighteen years old and if they haven’t opted out of gambling. You can rewrite that condition using bitwise operators:

if age >= 18 & ~is_self_excluded:

print("You can gamble")

Although this expression is syntactically correct, there are a few problems with it. First, it’s arguably less readable. Second, it doesn’t work as expected for all groups of data. You can demonstrate that by choosing specific operand values:

>>> age = 18

>>> is_self_excluded = True

>>> age >= 18 & ~is_self_excluded # Bitwise logical operators

True

>>> age >= 18 and not is_self_excluded # Logical operators

False

The expression made of the bitwise operators evaluates to True, while the same expression built from the logical operators evaluates to False. That’s because bitwise operators take precedence over the comparison operators, changing how the whole expression is interpreted:

>>> age >= (18 & ~is_self_excluded)

True

It’s as if someone put implicit parentheses around the wrong operands. To fix this, you can put explicit parentheses, which will enforce the correct order of evaluation:

>>> (age >= 18) & ~is_self_excluded

0

However, you no longer get a Boolean result. Python bitwise operators were designed primarily to work with integers, so their operands automatically get casted if needed. This may not always be possible, though.

While you can use truthy and falsy integers in a Boolean context, it’s a known antipattern that can cost you long hours of unnecessary debugging. You’re better off following the Zen of Python to save yourself the trouble.

Last but not least, you may deliberately want to use bitwise operators to disable the short-circuit evaluation of Boolean expressions. Expressions using logical operators are evaluated lazily from left to right. In other words, the evaluation stops as soon as the result of the entire expression is known:

>>> def call(x):

... print(f"call({x=})")

... return x

...

>>> call(False) or call(True) # Both operands evaluated

call(x=False)

call(x=True)

True

>>> call(True) or call(False) # Only the left operand evaluated

call(x=True)

True

In the second example, the right operand isn’t called at all because the value of the entire expression has already been determined by the value of the left operand. No matter what the right operand is, it won’t affect the result, so there’s no point in calling it unless you rely on side effects.

There are idioms, such as falling back to a default value, that take advantage of this peculiarity:

>>> (1 + 1) or "default" # The left operand is truthy

2

>>> (1 - 1) or "default" # The left operand is falsy

'default'

A Boolean expression takes the value of the last evaluated operand. The operand becomes truthy or falsy inside the expression but retains its original type and value afterward. In particular, a positive integer on the left gets propagated, while a zero gets discarded.

Unlike their logical counterparts, bitwise operators are evaluated eagerly:

>>> call(True) | call(False)

call(x=True)

call(x=False)

True

Even though knowing the left operand is sufficient to determine the value of the entire expression, all operands are always evaluated unconditionally.

Unless you have a strong reason and know what you’re doing, you should use bitwise operators only for controlling bits. It’s too easy to get it wrong otherwise. In most cases, you’ll want to pass integers as arguments to the bitwise operators.

Bitwise AND

The bitwise AND operator (&) performs logical conjunction on the corresponding bits of its operands. For each pair of bits occupying the same position in the two numbers, it returns a one only when both bits are switched on:

The resulting bit pattern is an intersection of the operator’s arguments. It has two bits turned on in the positions where both operands are ones. In all other places, at least one of the inputs has a zero bit.

Arithmetically, this is equivalent to a product of two bit values. You can calculate the bitwise AND of numbers a and b by multiplying their bits at every index i:

Here’s a concrete example:

| Expression | Binary Value | Decimal Value |

|---|---|---|

a |

100111002 | 15610 |

b |

1101002 | 5210 |

a & b |

101002 | 2010 |

A one multiplied by one gives one, but anything multiplied by zero will always result in zero. Alternatively, you can take the minimum of the two bits in each pair. Notice that when operands have unequal bit-lengths, the shorter one is automatically padded with zeros to the left.

Bitwise OR

The bitwise OR operator (|) performs logical disjunction. For each corresponding pair of bits, it returns a one if at least one of them is switched on:

The resulting bit pattern is a union of the operator’s arguments. It has five bits turned on where either of the operands has a one. Only a combination of two zeros gives a zero in the final output.

The arithmetic behind it is a combination of a sum and a product of the bit values. To calculate the bitwise OR of numbers a and b, you need to apply the following formula to their bits at every index i:

Here’s a tangible example:

| Expression | Binary Value | Decimal Value |

|---|---|---|

a |

100111002 | 15610 |

b |

1101002 | 5210 |

a | b |

101111002 | 18810 |

It’s almost like a sum of two bits but clamped at the higher end so that it never exceeds the value of one. You could also take the maximum of the two bits in each pair to get the same result.

Bitwise XOR

Unlike bitwise AND, OR, and NOT, the bitwise XOR operator (^) doesn’t have a logical counterpart in Python. However, you can simulate it by building on top of the existing operators:

def xor(a, b):

return (a and not b) or (not a and b)

It evaluates two mutually exclusive conditions and tells you whether exactly one of them is met. For example, a person can be either a minor or an adult, but not both at the same time. Conversely, it’s not possible for a person to be neither a minor nor an adult. The choice is mandatory.

The name XOR stands for “exclusive or” since it performs exclusive disjunction on the bit pairs. In other words, every bit pair must contain opposing bit values to produce a one:

Visually, it’s a symmetric difference of the operator’s arguments. There are three bits switched on in the result where both numbers have different bit values. Bits in the remaining positions cancel out because they’re the same.

Similarly to the bitwise OR operator, the arithmetic of XOR involves a sum. However, while the bitwise OR clamps values at one, the XOR operator wraps them around with a sum modulo two:

Modulo is a function of two numbers—the dividend and the divisor—that performs a division and returns its remainder. In Python, there’s a built-in modulo operator denoted with the percent sign (%).

Once again, you can confirm the formula by looking at an example:

| Expression | Binary Value | Decimal Value |

|---|---|---|

a |

100111002 | 15610 |

b |

1101002 | 5210 |

a ^ b |

101010002 | 16810 |

The sum of two zeros or two ones yields a whole number when divided by two, so the result has a remainder of zero. However, when you divide the sum of two different bit values by two, you get a fraction with a remainder of one. A more straightforward formula for the XOR operator is the difference between the maximum and the minimum of both bits in each pair.

Bitwise NOT

The last of the bitwise logical operators is the bitwise NOT operator (~), which expects just one argument, making it the only unary bitwise operator. It performs logical negation on a given number by flipping all of its bits:

The inverted bits are a complement to one, which turns zeros into ones and ones into zeros. It can be expressed arithmetically as the subtraction of individual bit values from one:

Here’s an example showing one of the numbers used before:

| Expression | Binary Value | Decimal Value |

|---|---|---|

a |

100111002 | 15610 |

~a |

11000112 | 9910 |

While the bitwise NOT operator seems to be the most straightforward of them all, you need to exercise extreme caution when using it in Python. Everything you’ve read so far is based on the assumption that numbers are represented with unsigned integers.

Note: Unsigned data types don’t let you store negative numbers such as -273 because there’s no space for a sign in a regular bit pattern. Trying to do so would result in a compilation error, a runtime exception, or an integer overflow depending on the language used.

Although there are ways to simulate unsigned integers, Python doesn’t support them natively. That means all numbers have an implicit sign attached to them whether you specify one or not. This shows when you do a bitwise NOT of any number:

>>> ~156

-157

Instead of the expected 9910, you get a negative value! The reason for this will become clear once you learn about the various binary number representations. For now, the quick-fix solution is to take advantage of the bitwise AND operator:

>>> ~156 & 255

99

That’s a perfect example of a bitmask, which you’ll explore in one of the upcoming sections.

Bitwise Shift Operators

Bitwise shift operators are another kind of tool for bit manipulation. They let you move the bits around, which will be handy for creating bitmasks later on. In the past, they were often used to improve the speed of certain mathematical operations.

Left Shift

The bitwise left shift operator (<<) moves the bits of its first operand to the left by the number of places specified in its second operand. It also takes care of inserting enough zero bits to fill the gap that arises on the right edge of the new bit pattern:

Shifting a single bit to the left by one place doubles its value. For example, instead of a two, the bit will indicate a four after the shift. Moving it two places to the left will quadruple the resulting value. When you add up all the bits in a given number, you’ll notice that it also gets doubled with every place shifted:

| Expression | Binary Value | Decimal Value |

|---|---|---|

a |

1001112 | 3910 |

a << 1 |

10011102 | 7810 |

a << 2 |

100111002 | 15610 |

a << 3 |

1001110002 | 31210 |

In general, shifting bits to the left corresponds to multiplying the number by a power of two, with an exponent equal to the number of places shifted:

The left shift used to be a popular optimization technique because bit shifting is a single instruction and is cheaper to calculate than exponent or product. Today, however, compilers and interpreters, including Python’s, are quite capable of optimizing your code behind the scenes.

Note: Don’t use the bit shift operators as a means of premature optimization in Python. You won’t see a difference in execution speed, but you’ll most definitely make your code less readable.

On paper, the bit pattern resulting from a left shift becomes longer by as many places as you shift it. That’s also true for Python in general because of how it handles integers. However, in most practical cases, you’ll want to constrain the length of a bit pattern to be a multiple of eight, which is the standard byte length.

For example, if you’re working with a single byte, then shifting it to the left should discard all the bits that go beyond its left boundary:

It’s sort of like looking at an unbounded stream of bits through a fixed-length window. There are a few tricks that let you do this in Python. For example, you can apply a bitmask with the bitwise AND operator:

>>> 39 << 3

312

>>> (39 << 3) & 255

56

Shifting 3910 by three places to the left returns a number higher than the maximum value that you can store on a single byte. It takes nine bits, whereas a byte has only eight. To chop off that one extra bit on the left, you can apply a bitmask with the appropriate value. If you’d like to keep more or fewer bits, then you’ll need to modify the mask value accordingly.

Right Shift

The bitwise right shift operator (>>) is analogous to the left one, but instead of moving bits to the left, it pushes them to the right by the specified number of places. The rightmost bits always get dropped:

Every time you shift a bit to the right by one position, you halve its underlying value. Moving the same bit by two places to the right produces a quarter of the original value, and so on. When you add up all the individual bits, you’ll see that the same rule applies to the number they represent:

| Expression | Binary Value | Decimal Value |

|---|---|---|

a |

100111012 | 15710 |

a >> 1 |

10011102 | 7810 |

a >> 2 |

1001112 | 3910 |

a >> 3 |

100112 | 1910 |

Halving an odd number such as 15710 would produce a fraction. To get rid of it, the right shift operator automatically floors the result. It’s virtually the same as a floor division by a power of two:

Again, the exponent corresponds to the number of places shifted to the right. In Python, you can leverage a dedicated operator to perform a floor division:

>>> 5 >> 1 # Bitwise right shift

2

>>> 5 // 2 # Floor division (integer division)

2

>>> 5 / 2 # Floating-point division

2.5

The bitwise right shift operator and the floor division operator both work the same way, even for negative numbers. However, the floor division lets you choose any divisor and not just a power of two. Using the bitwise right shift was a common way of improving the performance of some arithmetic divisions.

Note: You might be wondering what happens when you run out of bits to shift. For example, when you try pushing by more places than there are bits in a number:

>>> 2 >> 5

0

Once there are no more bits switched on, you’re stuck with a value of zero. Zero divided by anything will always return zero. However, things get trickier when you right shift a negative number because the implicit sign bit gets in the way:

>>> -2 >> 5

-1

The rule of thumb is that, regardless of the sign, the result will be the same as a floor division by some power of two. The floor of a small negative fraction is always minus one, and that’s what you’ll get. Read on for a more detailed explanation.

Just like with the left shift operator, the bit pattern changes its size after a right shift. While moving bits to the right makes the binary sequence shorter, it usually won’t matter because you can put as many zeros in front of a bit sequence as you like without changing the value. For example, 1012 is the same as 01012, and so is 000001012, provided that you’re dealing with nonnegative numbers.

Sometimes you’ll want to keep a given bit-length after doing a right shift to align it against another value or to fit in somewhere. You can do that by applying a bitmask:

It carves out only those bits you’re interested in and fills the bit pattern with leading zeros if necessary.

The handling of negative numbers in Python is slightly different from the traditional approach to bitwise shifting. In the next section, you’ll examine this in more detail.

Arithmetic vs Logical Shift

You can further categorize the bitwise shift operators as arithmetic and logical shift operators. While Python only lets you do the arithmetic shift, it’s worthwhile to know how other programming languages implement the bitwise shift operators to avoid confusion and surprises.

This distinction comes from the way they handle the sign bit, which ordinarily lies at the far left edge of a signed binary sequence. In practice, it’s relevant only to the right shift operator, which can cause a number to flip its sign, leading to integer overflow.

Note: Java and JavaScript, for example, distinguish the logical right shift operator with an additional greater-than sign (>>>). Since the left shift operator behaves consistently across both kinds of shifts, these languages don’t define a logical left shift counterpart.

Conventionally, a turned-on sign bit indicates negative numbers, which helps keep the arithmetic properties of a binary sequence:

| Decimal Value | Signed Binary Value | Sign Bit | Sign | Meaning |

|---|---|---|---|---|

| -10010 | 100111002 | 1 | - | Negative number |

| 2810 | 000111002 | 0 | + | Positive number or zero |

Looking from the left at these two binary sequences, you can see that their first bit carries the sign information, while the remaining part consists of the magnitude bits, which are the same for both numbers.

Note: The specific decimal values will depend on how you decide to express signed numbers in binary. It varies between languages and gets even more complicated in Python, so you can ignore it for the moment. You’ll have a better picture once you get to the binary number representations section below.

A logical right shift, also known as an unsigned right shift or a zero-fill right shift, moves the entire binary sequence, including the sign bit, and fills the resulting gap on the left with zeros:

Notice how the information about the sign of the number is lost. Regardless of the original sign, it’ll always produce a nonnegative integer because the sign bit gets replaced by zero. As long as you aren’t interested in the numeric values, a logical right shift can be useful in processing low-level binary data.

However, because signed binary numbers are typically stored on a fixed-length bit sequence in most languages, it can make the result wrap around the extreme values. You can see this in an interactive Java Shell tool:

jshell> -100 >>> 1

$1 ==> 2147483598

The resulting number changes its sign from negative to positive, but it also overflows, ending up very close to Java’s maximum integer:

jshell> Integer.MAX_VALUE

$2 ==> 2147483647

This number may seem arbitrary at first glance, but it’s directly related to the number of bits that Java allocates for the Integer data type:

jshell> Integer.toBinaryString(-100)

$3 ==> "11111111111111111111111110011100"

It uses 32 bits to store signed integers in two’s complement representation. When you take the sign bit out, you’re left with 31 bits, whose maximum decimal value is equal to 231 - 1, or 214748364710.

Python, on the other hand, stores integers as if there were an infinite number of bits at your disposal. Consequently, a logical right shift operator wouldn’t be well defined in pure Python, so it’s missing from the language. You can still simulate it, though.

One way of doing so is to take advantage of the unsigned data types available in C that are exposed through the built-in ctypes module:

>>> from ctypes import c_uint32 as unsigned_int32

>>> unsigned_int32(-100).value >> 1

2147483598

They let you pass in a negative number but don’t attach any special meaning to the sign bit. It’s treated like the rest of the magnitude bits.

While there are only a few predefined unsigned integer types in C, which differ in bit-length, you can create a custom function in Python to handle arbitrary bit-lengths:

>>> def logical_rshift(signed_integer, places, num_bits=32):

... unsigned_integer = signed_integer % (1 << num_bits)

... return unsigned_integer >> places

...

>>> logical_rshift(-100, 1)

2147483598

This converts a signed bit sequence to an unsigned one and then performs the regular arithmetic right shift.

However, since bit sequences in Python aren’t fixed in length, they don’t really have a sign bit. Moreover, they don’t use the traditional two’s complement representation like in C or Java. To mitigate that, you can take advantage of the modulo operation, which will keep the original bit patterns for positive integers while appropriately wrapping around the negative ones.

An arithmetic right shift (>>), sometimes called the signed right shift operator, maintains the sign of a number by replicating its sign bit before moving bits to the right:

In other words, it fills the gap on the left with whatever the sign bit was. Combined with the two’s complement representation of signed binary, this results in an arithmetically correct value. Regardless of whether the number is positive or negative, an arithmetic right shift is equivalent to floor division.

As you’re about to find out, Python doesn’t always store integers in plain two’s complement binary. Instead, it follows a custom adaptive strategy that works like sign-magnitude with an unlimited number of bits. It converts numbers back and forth between their internal representation and two’s complement to mimic the standard behavior of the arithmetic shift.

Binary Number Representations

You’ve experienced firsthand the lack of unsigned data types in Python when using the bitwise negation (~) and the right shift operator (>>). You’ve seen hints about the unusual approach to storing integers in Python, which makes handling negative numbers tricky. To use bitwise operators effectively, you need to know about the various representations of numbers in binary.

Unsigned Integers

In programming languages like C, you choose whether to use the signed or unsigned flavor of a given numeric type. Unsigned data types are more suitable when you know for sure that you’ll never need to deal with negative numbers. By allocating that one extra bit, which would otherwise serve as a sign bit, you practically double the range of available values.

It also makes things a little safer by increasing the maximum limit before an overflow happens. However, overflows happen only with fixed bit-lengths, so they’re irrelevant to Python, which doesn’t have such constraints.

The quickest way to get a taste of the unsigned numeric types in Python is to use the previously mentioned ctypes module:

>>> from ctypes import c_uint8 as unsigned_byte

>>> unsigned_byte(-42).value

214

Since there’s no sign bit in such integers, all their bits represent the magnitude of a number. Passing a negative number forces Python to reinterpret the bit pattern as if it had only the magnitude bits.

Signed Integers

The sign of a number has only two states. If you ignore zero for a moment, then it can be either positive or negative, which translates nicely to the binary system. Yet there are a few alternative ways to represent signed integers in binary, each with its own pros and cons.

Probably the most straightforward one is the sign-magnitude, which builds naturally on top of unsigned integers. When a binary sequence is interpreted as sign-magnitude, the most significant bit plays the role of a sign bit, while the rest of the bits work the same as usual:

| Binary Sequence | Sign-Magnitude Value | Unsigned Value |

|---|---|---|

| 001010102 | 4210 | 4210 |

| 101010102 | -4210 | 17010 |

A zero on the leftmost bit indicates a positive (+) number, and a one indicates a negative (-) number. Notice that a sign bit doesn’t contribute to the number’s absolute value in sign-magnitude representation. It’s there only to let you flip the sign of the remaining bits.

Why the leftmost bit?

It keeps bit indexing intact, which, in turn, helps maintain backward compatibility of the bit weights used to calculate the decimal value of a binary sequence. However, not everything about sign-magnitude is so great.

Note: Binary representations of signed integers only make sense on fixed-length bit sequences. Otherwise, you couldn’t tell where the sign bit was. In Python, however, you can represent integers with as many bits as you like:

>>> f"{-5 & 0b1111:04b}"

'1011'

>>> f"{-5 & 0b11111111:08b}"

'11111011'

Whether it’s four bits or eight, the sign bit will always be found in the leftmost position.

The range of values that you can store in a sign-magnitude bit pattern is symmetrical. But it also means that you end up with two ways to convey zero:

| Binary Sequence | Sign-Magnitude Value | Unsigned Value |

|---|---|---|

| 000000002 | +010 | 010 |

| 100000002 | -010 | 12810 |

Zero doesn’t technically have a sign, but there’s no way not to include one in sign-magnitude. While having an ambiguous zero isn’t ideal in most cases, it’s not the worst part of the story. The biggest downside of this method is cumbersome binary arithmetic.

When you apply standard binary arithmetic to numbers stored in sign-magnitude, it may not give you the expected results. For example, adding two numbers with the same magnitude but opposite signs won’t make them cancel out:

| Expression | Binary Sequence | Sign-Magnitude Value |

|---|---|---|

a |

001010102 | 4210 |

b |

101010102 | -4210 |

a + b |

110101002 | -8410 |

The sum of 42 and -42 doesn’t produce zero. Also, the carryover bit can sometimes propagate from magnitude to the sign bit, inverting the sign and yielding an unexpected result.

To address these problems, some of the early computers employed one’s complement representation. The idea was to change how decimal numbers are mapped to particular binary sequences so that they can be added up correctly. For a deeper dive into one’s complement, you can expand the section below.

In one’s complement, positive numbers are the same as in sign-magnitude, but negative numbers are obtained by flipping the positive number’s bits using a bitwise NOT:

| Positive Sequence | Negative Sequence | Magnitude Value |

|---|---|---|

| 000000002 | 111111112 | ±010 |

| 000000012 | 111111102 | ±110 |

| 000000102 | 111111012 | ±210 |

| ⋮ | ⋮ | ⋮ |

| 011111112 | 100000002 | ±12710 |

This preserves the original meaning of the sign bit, so positive numbers still begin with a binary zero, while negative ones start with a binary one. Likewise, the range of values remains symmetrical and continues to have two ways to represent zero. However, the binary sequences of negative numbers in one’s complement are arranged in reverse order as compared to sign-magnitude:

| One’s Complement | Sign-Magnitude | Decimal Value |

|---|---|---|

| 111111112 | 100000002 | -010 |

| 111111102 | 100000012 | -110 |

| 111111012 | 100000102 | -210 |

| ↓ | ↑ | ⋮ |

| 100000102 | 111111012 | -12510 |

| 100000012 | 111111102 | -12610 |

| 100000002 | 111111112 | -12710 |

Thanks to that, you can now add two numbers more reliably because the sign bit doesn’t need special treatment. If a carryover originates from the sign bit, it’s fed back at the right edge of the binary sequence instead of just being dropped. This ensures the correct result.

Nevertheless, modern computers don’t use one’s complement to represent integers because there’s an even better way called two’s complement. By applying a small modification, you can eliminate double zero and simplify the binary arithmetic in one go. To explore two’s complement in more detail, you can expand the section below.

When finding bit sequences of negative values in two’s complement, the trick is to add one to the result after negating the bits:

| Positive Sequence | One’s Complement (NOT) | Two’s Complement (NOT+1) |

|---|---|---|

| 000000002 | 111111112 | 000000002 |

| 000000012 | 111111102 | 111111112 |

| 000000102 | 111111012 | 111111102 |

| ⋮ | ⋮ | ⋮ |

| 011111112 | 100000002 | 100000012 |

This pushes the bit sequences of negative numbers down by one place, eliminating the notorious minus zero. A more useful minus one will take over its bit pattern instead.

As a side effect, the range of available values in two’s complement becomes asymmetrical, with a lower bound that’s a power of two and an odd upper bound. For example, an 8-bit signed integer will let you store numbers from -12810 to 12710 in two’s complement:

| Two’s Complement | One’s Complement | Decimal Value |

|---|---|---|

| 100000002 | N/A | -12810 |

| 100000012 | 100000002 | -12710 |

| 100000102 | 100000012 | -12610 |

| ⋮ | ⋮ | ⋮ |

| 111111102 | 111111012 | -210 |

| 111111112 | 111111102 | -110 |

| N/A | 111111112 | -010 |

| 000000002 | 000000002 | 010 |

| 000000012 | 000000012 | 110 |

| 000000102 | 000000102 | 210 |

| ⋮ | ⋮ | ⋮ |

| 011111112 | 011111112 | 12710 |

Another way to put it is that the most significant bit carries both the sign and part of the number magnitude:

| Bit 7 | Bit 6 | Bit 5 | Bit 4 | Bit 3 | Bit 2 | Bit 1 | Bit 0 |

|---|---|---|---|---|---|---|---|

| -27 | 26 | 25 | 24 | 23 | 22 | 21 | 20 |

| -128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |

Notice the minus sign next to the leftmost bit weight. Deriving a decimal value from a binary sequence like that is only a matter of adding appropriate columns. For example, the value of 110101102 in 8-bit two’s complement representation is the same as the sum: -12810 + 6410 + 1610 + 410 + 210 = -4210.

With the two’s complement representation, you no longer need to worry about the carryover bit unless you want to use it as an overflow detection mechanism, which is kind of neat.

There are a few other variants of signed number representations, but they’re not as popular.

Floating-Point Numbers

The IEEE 754 standard defines a binary representation for real numbers consisting of the sign, exponent, and mantissa bits. Without getting into too many technical details, you can think of it as the scientific notation for binary numbers. The decimal point “floats” around to accommodate a varying number of significant figures, except it’s a binary point.

Two data types conforming to that standard are widely supported:

- Single precision: 1 sign bit, 8 exponent bits, 23 mantissa bits

- Double precision: 1 sign bit, 11 exponent bits, 52 mantissa bits

Python’s float data type is equivalent to the double-precision type. Note that some applications require more or fewer bits. For example, the OpenEXR image format takes advantage of half precision to represent pixels with a high dynamic range of colors at a reasonable file size.

The number Pi (π) has the following binary representation in single precision when rounded to five decimal places:

| Sign | Exponent | Mantissa |

|---|---|---|

| 02 | 100000002 | .100100100001111110100002 |

The sign bit works just like with integers, so zero denotes a positive number. For the exponent and mantissa, however, different rules can apply depending on a few edge cases.

First, you need to convert them from binary to the decimal form:

- Exponent: 12810

- Mantissa: 2-1 + 2-4 + … + 2-19 = 29926110/52428810 ≈ 0.57079510

The exponent is stored as an unsigned integer, but to account for negative values, it usually has a bias equal to 12710 in single precision. You need to subtract it to recover the actual exponent.

Mantissa bits represent a fraction, so they correspond to negative powers of two. Additionally, you need to add one to the mantissa because it assumes an implicit leading bit before the radix point in this particular case.

Putting it all together, you arrive at the following formula to convert a floating-point binary number into a decimal one:

When you substitute the variables for the actual values in the example above, you’ll be able to decipher the bit pattern of a floating-point number stored in single precision:

There it is, granted that Pi has been rounded to five decimal places. You’ll learn how to display such numbers in binary later on.

Fixed-Point Numbers

While floating-point numbers are a good fit for engineering purposes, they fail in monetary calculations due to their limited precision. For example, some numbers with a finite representation in decimal notation have only an infinite representation in binary. That often results in a rounding error, which can accumulate over time:

>>> 0.1 + 0.2

0.30000000000000004

In such cases, you’re better off using Python’s decimal module, which implements fixed-point arithmetic and lets you specify where to put the decimal point on a given bit-length. For example, you can tell it how many digits you want to preserve:

>>> from decimal import Decimal, localcontext

>>> with localcontext() as context:

... context.prec = 5 # Number of digits

... print(Decimal("123.456") * 1)

...

123.46

However, it includes all digits, not just the fractional ones.

Note: If you’re working with rational numbers, then you might be interested in checking out the fractions module, which is part of Python’s standard library.

If you can’t or don’t want to use a fixed-point data type, a straightforward way to reliably store currency values is to scale the amounts to the smallest unit, such as cents, and represent them with integers.

Integers in Python

In the old days of programming, computer memory was at a premium. Therefore, languages would give you pretty granular control over how many bytes to allocate for your data. Let’s take a quick peek at a few integer types from C as an example:

| Type | Size | Minimum Value | Maximum Value |

|---|---|---|---|

char |

1 byte | -128 | 127 |

short |

2 bytes | -32,768 | 32,767 |

int |

4 bytes | -2,147,483,648 | 2,147,483,647 |

long |

8 bytes | -9,223,372,036,854,775,808 | 9,223,372,036,854,775,807 |

These values might vary from platform to platform. However, such an abundance of numeric types allows you to arrange data in memory compactly. Remember that these don’t even include unsigned types!

On the other end of the spectrum are languages such as JavaScript, which have just one numeric type to rule them all. While this is less confusing for beginning programmers, it comes at the price of increased memory consumption, reduced processing efficiency, and decreased precision.

When talking about bitwise operators, it’s essential to understand how Python handles integer numbers. After all, you’ll use these operators mainly to work with integers. There are a couple of wildly different representations of integers in Python that depend on their values.

Interned Integers

In CPython, very small integers between -510 and 25610 are interned in a global cache to gain some performance because numbers in that range are commonly used. In practice, whenever you refer to one of those values, which are singletons created at the interpreter startup, Python will always provide the same instance:

>>> a = 256

>>> b = 256

>>> a is b

True

>>> print(id(a), id(b), sep="\n")

94050914330336

94050914330336

Both variables have the same identity because they refer to the exact same object in memory. That’s typical of reference types but not immutable values such as integers. However, when you go beyond that range of cached values, Python will start creating distinct copies during variable assignment:

>>> a = 257

>>> b = 257

>>> a is b

False

>>> print(id(a), id(b), sep="\n")

140282370939376

140282370939120

Despite having equal values, these variables point to separate objects now. But don’t let that fool you. Python will occasionally jump in and optimize your code behind the scenes. For example, it’ll cache a number that occurs on the same line multiple times regardless of its value:

>>> a = 257

>>> b = 257

>>> print(id(a), id(b), sep="\n")

140258768039856

140258768039728

>>> print(id(257), id(257), sep="\n")

140258768039760

140258768039760

Variables a and b are independent objects because they reside at different memory locations, while the numbers used literally in print() are, in fact, the same object.

Note: Interning is an implementation detail of the CPython interpreter, which might change in future versions, so don’t rely on it in your programs.

Interestingly, there’s a similar string interning mechanism in Python, which kicks in for short texts comprised of ASCII letters only. It helps speed up dictionary lookups by allowing their keys to be compared by memory addresses, or C pointers, instead of by the individual string characters.

Fixed-Precision Integers

Integers that you’re most likely to find in Python will leverage the C signed long data type. They use the classic two’s complement binary representation on a fixed number of bits. The exact bit-length will depend on your hardware platform, operating system, and Python interpreter version.

Modern computers typically use 64-bit architecture, so this would translate to decimal numbers between -263 and 263 - 1. You can check the maximum value of a fixed-precision integer in Python in the following way:

>>> import sys

>>> sys.maxsize

9223372036854775807

It’s huge! Roughly 9 million times the number of stars in our galaxy, so it should suffice for everyday use. While the maximum value that you could squeeze out of the unsigned long type in C is even bigger, on the order of 1019, integers in Python have no theoretical limit. To allow this, numbers that don’t fit on a fixed-length bit sequence are stored differently in memory.

Arbitrary-Precision Integers

Do you remember that popular K-pop song “Gangnam Style” that became a worldwide hit in 2012? The YouTube video was the first to break a billion views. Soon after that, so many people had watched the video that it made the view counter overflow. YouTube had no choice but to upgrade their counter from 32-bit signed integers to 64-bit ones.

That might give plenty of headroom for a view counter, but there are even bigger numbers that aren’t uncommon in real life, notably in the scientific world. Nonetheless, Python can deal with them effortlessly:

>>> from math import factorial

>>> factorial(42)

1405006117752879898543142606244511569936384000000000

This number has fifty-two decimal digits. It would take at least 170 bits to represent it in binary with the traditional approach:

>>> factorial(42).bit_length()

170

Since they’re well over the limits that any of the C types have to offer, such astronomical numbers are converted into a sign-magnitude positional system, whose base is 230. Yes, you read that correctly. Whereas you have ten fingers, Python has over a billion!

Again, this may vary depending on the platform you’re currently using. When in doubt, you can double-check:

>>> import sys

>>> sys.int_info

sys.int_info(bits_per_digit=30, sizeof_digit=4)

This will tell you how many bits are used per digit and what the size in bytes is of the underlying C structure. To get the same namedtuple in Python 2, you’d refer to the sys.long_info attribute instead.

While this conversion between fixed- and arbitrary-precision integers is done seamlessly under the hood in Python 3, there was a time when things were more explicit. For more information, you can expand the box below.

In the past, Python explicitly defined two distinct integer types:

- Plain integer

- Long integer

The first one was modeled after the C signed long type, which typically occupied 32 or 64 bits and offered a limited range of values:

>>> # Python 2

>>> import sys

>>> sys.maxint

9223372036854775807

>>> type(sys.maxint)

<type 'int'>

For bigger numbers, you were supposed to use the second type that didn’t come with a limit. Python would automatically promote plain integers to long ones if needed:

>>> # Python 2

>>> import sys

>>> sys.maxint + 1

9223372036854775808L

>>> type(sys.maxint + 1)

<type 'long'>

This feature prevented the integer overflow error. Notice the letter L at the end of a literal, which could be used to enforce the given type by hand:

>>> # Python 2

>>> type(42)

<type 'int'>

>>> type(42L)

<type 'long'>

Eventually, both types were unified so that you wouldn’t have to think about it anymore.

Such a representation eliminates integer overflow errors and gives the illusion of infinite bit-length, but it requires significantly more memory. Additionally, performing bignum arithmetic is slower than with fixed precision because it can’t run directly in hardware without an intermediate layer of emulation.

Another challenge is keeping a consistent behavior of the bitwise operators across alternative integer types, which is crucial in handling the sign bit. Recall that fixed-precision integers in Python use the standard two’s complement representation from C, while large integers use sign-magnitude.

To mitigate that difference, Python will do the necessary binary conversion for you. It might change how a number is represented before and after applying a bitwise operator. Here’s a relevant comment from the CPython source code, which explains this in more detail:

Bitwise operations for negative numbers operate as though on a two’s complement representation. So convert arguments from sign-magnitude to two’s complement, and convert the result back to sign-magnitude at the end. (Source)

In other words, negative numbers are treated as two’s complement bit sequences when you apply the bitwise operators on them, even though the result will be presented to you in sign-magnitude form. There are ways to emulate the sign bit and some of the unsigned types in Python, though.

Bit Strings in Python

You’re welcome to use pen and paper throughout the rest of this article. It may even serve as a great exercise! However, at some point, you’ll want to verify whether your binary sequences or bit strings correspond to the expected numbers in Python. Here’s how.

Converting int to Binary

To reveal the bits making up an integer number in Python, you can print a formatted string literal, which optionally lets you specify the number of leading zeros to display:

>>> print(f"{42:b}") # Print 42 in binary

101010

>>> print(f"{42:032b}") # Print 42 in binary on 32 zero-padded digits

00000000000000000000000000101010

Alternatively, you can call bin() with the number as an argument:

>>> bin(42)

'0b101010'

This global built-in function returns a string consisting of a binary literal, which starts with the prefix 0b and is followed by ones and zeros. It always shows the minimum number of digits without the leading zeros.

You can use such literals verbatim in your code, too:

>>> age = 0b101010

>>> print(age)

42

Other integer literals available in Python are the hexadecimal and octal ones, which you can obtain with the hex() and oct() functions, respectively:

>>> hex(42)

'0x2a'

>>> oct(42)

'0o52'

Notice how the hexadecimal system, which is base sixteen, takes advantage of letters A through F to augment the set of available digits. The octal literals in other programming languages are usually prefixed with plain zero, which might be confusing. Python explicitly forbids such literals to avoid making a mistake:

>>> 052

File "<stdin>", line 1

SyntaxError: leading zeros in decimal integer literals are not permitted;

use an 0o prefix for octal integers

You can express the same value in different ways using any of the mentioned integer literals:

>>> 42 == 0b101010 == 0x2a == 0o52

True

Choose the one that makes the most sense in context. For example, it’s customary to express bitmasks with hexadecimal notation. On the other hand, the octal literal is rarely seen these days.

All numeric literals in Python are case insensitive, so you can prefix them with either lowercase or uppercase letters:

>>> 0b101 == 0B101

True

This also applies to floating-point number literals that use scientific notation as well as complex number literals.

Converting Binary to int

Once you have your bit string ready, you can get its decimal representation by taking advantage of a binary literal:

>>> 0b101010

42

This is a quick way to do the conversion while working inside the interactive Python interpreter. Unfortunately, it won’t let you convert bit sequences synthesized at runtime because all literals need to be hard-coded in the source code.

Note: You might be tempted to evaluate Python code with eval("0b101010"), but that’s an easy way to compromise the security of your program, so don’t do it!

Calling int() with two arguments will work better in the case of dynamically generated bit strings:

>>> int("101010", 2)

42

>>> int("cafe", 16)

51966

The first argument is a string of digits, while the second one determines the base of the numeral system. Unlike a binary literal, a string can come from anywhere, even a user typing on the keyboard. For a deeper look at int(), you can expand the box below.

There are other ways to call int(). For example, it returns zero when called without arguments:

>>> int()

0

This feature makes it a common pattern in the defaultdict collection, which needs a default value provider. Take this as an example:

>>> from collections import defaultdict

>>> word_counts = defaultdict(int)

>>> for word in "A sentence with a message".split():

... word_counts[word.lower()] += 1

...

>>> dict(word_counts)

{'a': 2, 'sentence': 1, 'with': 1, 'message': 1}

Here, int() helps to count words in a sentence. It’s called automatically whenever defaultdict needs to initialize the value of a missing key in the dictionary.

Another popular use of int() is typecasting. For example, when you pass int() a floating-point value, it truncates the value by removing the fractional component:

>>> int(3.14)

3

When you give it a string, it tries to parse out a number from it:

>>> int(input("Enter your age: "))

Enter your age: 42

42

In general, int() will accept an object of any type as long as it defines a special method that can handle the conversion.

So far, so good. But what about negative numbers?

Emulating the Sign Bit

When you call bin() on a negative integer, it merely prepends the minus sign to the bit string obtained from the corresponding positive value:

>>> print(bin(-42), bin(42), sep="\n ")

-0b101010

0b101010

Changing the sign of a number doesn’t affect the underlying bit string in Python. Conversely, you’re allowed to prefix a bit string with the minus sign when transforming it to decimal form:

>>> int("-101010", 2)

-42

That makes sense in Python because, internally, it doesn’t use the sign bit. You can think of the sign of an integer number in Python as a piece of information stored separately from the modulus.

However, there are a few workarounds that let you emulate fixed-length bit sequences containing the sign bit:

- Bitmask

- Modulo operation (

%) ctypesmodulearraymodulestructmodule

You know from earlier sections that to ensure a certain bit-length of a number, you can use a nifty bitmask. For example, to keep one byte, you can use a mask composed of exactly eight turned-on bits:

>>> mask = 0b11111111 # Same as 0xff or 255

>>> bin(-42 & mask)

'0b11010110'

Masking forces Python to temporarily change the number’s representation from sign-magnitude to two’s complement and then back again. If you forget about the decimal value of the resulting binary literal, which is equal to 21410, then it’ll represent -4210 in two’s complement. The leftmost bit will be the sign bit.

Alternatively, you can take advantage of the modulo operation that you used previously to simulate the logical right shift in Python:

>>> bin(-42 % (1 << 8)) # Give me eight bits

'0b11010110'

If that looks too convoluted for your taste, then you can use one of the modules from the standard library that express the same intent more clearly. For example, using ctypes will have an identical effect:

>>> from ctypes import c_uint8 as unsigned_byte

>>> bin(unsigned_byte(-42).value)

'0b11010110'

You’ve seen it before, but just as a reminder, it’ll piggyback off the unsigned integer types from C.

Another standard module that you can use for this kind of conversion in Python is the array module. It defines a data structure that’s similar to a list but is only allowed to hold elements of the same numeric type. When declaring an array, you need to indicate its type up front with a corresponding letter:

>>> from array import array

>>> signed = array("b", [-42, 42])

>>> unsigned = array("B")

>>> unsigned.frombytes(signed.tobytes())

>>> unsigned

array('B', [214, 42])

>>> bin(unsigned[0])

'0b11010110'

>>> bin(unsigned[1])

'0b101010'

For example, "b" stands for an 8-bit signed byte, while "B" stands for its unsigned equivalent. There are a few other predefined types, such as a signed 16-bit integer or a 32-bit floating-point number.

Copying raw bytes between these two arrays changes how bits are interpreted. However, it takes twice the amount of memory, which is quite wasteful. To perform such a bit rewriting in place, you can rely on the struct module, which uses a similar set of format characters for type declarations:

>>> from struct import pack, unpack

>>> unpack("BB", pack("bb", -42, 42))

(214, 42)

>>> bin(214)

'0b11010110'

Packing lets you lay objects in memory according to the given C data type specifiers. It returns a read-only bytes() object, which contains raw bytes of the resulting block of memory. Later, you can read back those bytes using a different set of type codes to change how they’re translated into Python objects.

Up to this point, you’ve used different techniques to obtain fixed-length bit strings of integers expressed in two’s complement representation. If you want to convert these types of bit sequences back to Python integers instead, then you can try this function:

def from_twos_complement(bit_string, num_bits=32):

unsigned = int(bit_string, 2)

sign_mask = 1 << (num_bits - 1) # For example 0b100000000

bits_mask = sign_mask - 1 # For example 0b011111111

return (unsigned & bits_mask) - (unsigned & sign_mask)

The function accepts a string composed of binary digits. First, it converts the digits to a plain unsigned integer, disregarding the sign bit. Next, it uses two bitmasks to extract the sign and magnitude bits, whose locations depend on the specified bit-length. Finally, it combines them using regular arithmetic, knowing that the value associated with the sign bit is negative.

You can try it out against the trusty old bit string from earlier examples:

>>> int("11010110", 2)

214

>>> from_twos_complement("11010110")

214

>>> from_twos_complement("11010110", num_bits=8)

-42

Python’s int() treats all the bits as the magnitude, so there are no surprises there. However, this new function assumes a 32-bit long string by default, which means the sign bit is implicitly equal to zero for shorter strings. When you request a bit-length that matches your bit string, then you’ll get the expected result.

While integer is the most appropriate data type for working with bitwise operators in most cases, you’ll sometimes need to extract and manipulate fragments of structured binary data, such as image pixels. The array and struct modules briefly touch upon this topic, so you’ll explore it in more detail next.

Seeing Data in Binary

You know how to read and interpret individual bytes. However, real-world data often consists of more than one byte to convey information. Take the float data type as an example. A single floating-point number in Python occupies as many as eight bytes in memory.

How do you see those bytes?

You can’t simply use bitwise operators because they don’t work with floating-point numbers:

>>> 3.14 & 0xff

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

TypeError: unsupported operand type(s) for &: 'float' and 'int'

You have to forget about the particular data type you’re dealing with and think of it in terms of a generic stream of bytes. That way, it won’t matter what the bytes represent outside the context of them being processed by the bitwise operators.

To get the bytes() of a floating-point number in Python, you can pack it using the familiar struct module:

>>> from struct import pack

>>> pack(">d", 3.14159)

b'@\t!\xf9\xf0\x1b\x86n'

Ignore the format characters passed through the first argument. They won’t make sense until you get to the byte order section below. Behind this rather obscure textual representation hides a list of eight integers:

>>> list(b"@\t!\xf9\xf0\x1b\x86n")

[64, 9, 33, 249, 240, 27, 134, 110]

Their values correspond to the subsequent bytes used to represent a floating-point number in binary. You can combine them to produce a very long bit string:

>>> from struct import pack

>>> "".join([f"{b:08b}" for b in pack(">d", 3.14159)])

'0100000000001001001000011111100111110000000110111000011001101110'

These 64 bits are the sign, exponent, and mantissa in double precision that you read about earlier. To synthesize a float from a similar bit string, you can reverse the process:

>>> from struct import unpack

>>> bits = "0100000000001001001000011111100111110000000110111000011001101110"

>>> unpack(

... ">d",

... bytes(int(bits[i:i+8], 2) for i in range(0, len(bits), 8))

... )

(3.14159,)

unpack() returns a tuple because it allows you to read more than one value at a time. For example, you could read the same bit string as four 16-bit signed integers:

>>> unpack(

... ">hhhh",

... bytes(int(bits[i:i+8], 2) for i in range(0, len(bits), 8))

... )

(16393, 8697, -4069, -31122)

As you can see, the way a bit string should be interpreted must be known up front to avoid ending up with garbled data. One important question you need to ask yourself is which end of the byte stream you should start reading from—left or right. Read on to find out.

Byte Order

There’s no dispute about the order of bits in a single byte. You’ll always find the least-significant bit at index zero and the most-significant bit at index seven, regardless of how they’re physically laid out in memory. The bitwise shift operators rely on this consistency.

However, there’s no consensus for the byte order in multibyte chunks of data. A piece of information comprising more than one byte can be read from left to right like an English text or from right to left like an Arabic one, for example. Computers see bytes in a binary stream like humans see words in a sentence.

It doesn’t matter which direction computers choose to read the bytes from as long as they apply the same rules everywhere. Unfortunately, different computer architectures use different approaches, which makes transferring data between them challenging.

Big-Endian vs Little-Endian

Let’s take a 32-bit unsigned integer corresponding to the number 196910, which was the year when Monty Python first appeared on TV. With all the leading zeros, it has the following binary representation 000000000000000000000111101100012.

How would you store such a value in computer memory?

If you imagine memory as a one-dimensional tape consisting of bytes, then you’d need to break that data down into individual bytes and arrange them in a contiguous block. Some find it natural to start from the left end because that’s how they read, while others prefer starting at the right end:

| Byte Order | Address N | Address N+1 | Address N+2 | Address N+3 |

|---|---|---|---|---|

| Big-Endian | 000000002 | 000000002 | 000001112 | 101100012 |

| Little-Endian | 101100012 | 000001112 | 000000002 | 000000002 |

When bytes are placed from left to right, the most-significant byte is assigned to the lowest memory address. This is known as the big-endian order. Conversely, when bytes are stored from right to left, the least-significant byte comes first. That’s called little-endian order.

Note: These humorous names draw inspiration from the eighteenth-century novel Gulliver’s Travels by Jonathan Swift. The author describes a conflict between the Little-Endians and the Big-Endians over the correct way to break the shell of a boiled egg. While Little-Endians prefer to start with the little pointy end, Big-Endians like the big end more.

Which way is better?

From a practical standpoint, there’s no real advantage of using one over the other. There might be some marginal gains in performance at the hardware level, but you won’t notice them. Major network protocols use the big-endian order, which allows them to filter data packets more quickly given the hierarchical design of IP addressing. Other than that, some people may find it more convenient to work with a particular byte order when debugging.

Either way, if you don’t get it right and mix up the two standards, then bad things start to happen:

>>> raw_bytes = (1969).to_bytes(length=4, byteorder="big")

>>> int.from_bytes(raw_bytes, byteorder="little")

2970025984

>>> int.from_bytes(raw_bytes, byteorder="big")

1969

When you serialize some value to a stream of bytes using one convention and try reading it back with another, you’ll get a completely useless result. This scenario is most likely when data is sent over a network, but you can also experience it when reading a local file in a specific format. For example, the header of a Windows bitmap always uses little-endian, while JPEG can use both byte orders.

Native Endianness

To find out your platform’s endianness, you can use the sys module:

>>> import sys

>>> sys.byteorder

'little'

You can’t change endianness, though, because it’s an intrinsic feature of your CPU architecture. It’s impossible to mock it for testing purposes without hardware virtualization such as QEMU, so even the popular VirtualBox won’t help.

Notably, the x86 family of processors from Intel and AMD, which power most modern laptops and desktops, are little-endian. Mobile devices are based on low-energy ARM architecture, which is bi-endian, while some older architectures such as the ancient Motorola 68000 were big-endian only.

For information on determining endianness in C, expand the box below.

Historically, the way to get your machine’s endianness in C was to declare a small integer and then read its first byte with a pointer:

#include <stdio.h>

#define BIG_ENDIAN "big"

#define LITTLE_ENDIAN "little"

char* byteorder() {

int x = 1;

char* pointer = (char*) &x; // Address of the 1st byte

return (*pointer > 0) ? LITTLE_ENDIAN : BIG_ENDIAN;

}

void main() {

printf("%s\n", byteorder());

}

If the value comes out higher than zero, then the byte stored at the lowest memory address must be the least-significant one.

Once you know the native endianness of your machine, you’ll want to convert between different byte orders when manipulating binary data. A universal way to do so, regardless of the data type at hand, is to reverse a generic bytes() object or a sequence of integers representing those bytes:

>>> big_endian = b"\x00\x00\x07\xb1"

>>> bytes(reversed(big_endian))

b'\xb1\x07\x00\x00'

However, it’s often more convenient to use the struct module, which lets you define standard C data types. In addition to this, it allows you to request a given byte order with an optional modifier:

>>> from struct import pack, unpack

>>> pack(">I", 1969) # Big-endian unsigned int

b'\x00\x00\x07\xb1'

>>> unpack("<I", b"\x00\x00\x07\xb1") # Little-endian unsigned int

(2970025984,)

The greater-than sign (>) indicates that bytes are laid out in the big-endian order, while the less-than symbol (<) corresponds to little-endian. If you don’t specify one, then native endianness is assumed. There are a few more modifiers, like the exclamation mark (!), which signifies the network byte order.

Network Byte Order

Computer networks are made of heterogeneous devices such as laptops, desktops, tablets, smartphones, and even light bulbs equipped with a Wi-Fi adapter. They all need agreed-upon protocols and standards, including the byte order for binary transmission, to communicate effectively.

At the dawn of the Internet, it was decided that the byte order for those network protocols would be big-endian.

Programs that want to communicate over a network can grab the classic C API, which abstracts away the nitty-gritty details with a socket layer. Python wraps that API through the built-in socket module. However, unless you’re writing a custom binary protocol, you’ll probably want to take advantage of an even higher-level abstraction, such as the HTTP protocol, which is text-based.

Where the socket module can be useful is in the byte order conversion. It exposes a few functions from the C API, with their distinctive, tongue-twisting names:

>>> from socket import htons, htonl, ntohs, ntohl

>>> htons(1969) # Host to network (short int)

45319

>>> htonl(1969) # Host to network (long int)

2970025984

>>> ntohs(45319) # Network to host (short int)

1969

>>> ntohl(2970025984) # Network to host (long int)

1969

If your host already uses the big-endian byte order, then there’s nothing to be done. The values will remain the same.

Bitmasks

A bitmask works like a graffiti stencil that blocks the paint from being sprayed on particular areas of a surface. It lets you isolate the bits to apply some function on them selectively. Bitmasking involves both the bitwise logical operators and the bitwise shift operators that you’ve read about.

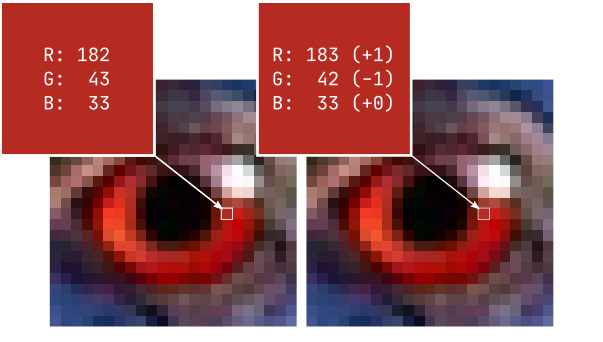

You can find bitmasks in a lot of different contexts. For example, the subnet mask in IP addressing is actually a bitmask that helps you extract the network address. Pixel channels, which correspond to the red, green, and blue colors in the RGB model, can be accessed with a bitmask. You can also use a bitmask to define Boolean flags that you can then pack on a bit field.

There are a few common types of operations associated with bitmasks. You’ll take a quick look at some of them below.

Getting a Bit

To read the value of a particular bit on a given position, you can use the bitwise AND against a bitmask composed of only one bit at the desired index:

>>> def get_bit(value, bit_index):

... return value & (1 << bit_index)

...

>>> get_bit(0b10000000, bit_index=5)

0

>>> get_bit(0b10100000, bit_index=5)

32

The mask will suppress all bits except for the one that you’re interested in. It’ll result in either zero or a power of two with an exponent equal to the bit index. If you’d like to get a simple yes-or-no answer instead, then you could shift to the right and check the least-significant bit:

>>> def get_normalized_bit(value, bit_index):

... return (value >> bit_index) & 1

...

>>> get_normalized_bit(0b10000000, bit_index=5)

0

>>> get_normalized_bit(0b10100000, bit_index=5)

1

This time, it will normalize the bit value so that it never exceeds one. You could then use that function to derive a Boolean True or False value rather than a numeric value.

Setting a Bit

Setting a bit is similar to getting one. You take advantage of the same bitmask as before, but instead of using bitwise AND, you use the bitwise OR operator:

>>> def set_bit(value, bit_index):

... return value | (1 << bit_index)

...

>>> set_bit(0b10000000, bit_index=5)

160

>>> bin(160)

'0b10100000'

The mask retains all the original bits while enforcing a binary one at the specified index. Had that bit already been set, its value wouldn’t have changed.

Unsetting a Bit

To clear a bit, you want to copy all binary digits while enforcing zero at one specific index. You can achieve this effect by using the same bitmask once again, but in the inverted form:

>>> def clear_bit(value, bit_index):

... return value & ~(1 << bit_index)

...

>>> clear_bit(0b11111111, bit_index=5)

223

>>> bin(223)

'0b11011111'

Using the bitwise NOT on a positive number always produces a negative value in Python. While this is generally undesirable, it doesn’t matter here because you immediately apply the bitwise AND operator. This, in turn, triggers the mask’s conversion to two’s complement representation, which gets you the expected result.

Toggling a Bit

Sometimes it’s useful to be able to toggle a bit on and off again periodically. That’s a perfect opportunity for the bitwise XOR operator, which can flip your bit like that:

>>> def toggle_bit(value, bit_index):

... return value ^ (1 << bit_index)

...

>>> x = 0b10100000

>>> for _ in range(5):

... x = toggle_bit(x, bit_index=7)

... print(bin(x))

...

0b100000

0b10100000

0b100000

0b10100000

0b100000